Multimedia Forensics

| (7 intermediate revisions by 2 users not shown) | |||

| Line 3: | Line 3: | ||

== People == | == People == | ||

* [https://maarab-sfu.github.io Mohammad Amin Arab] | * [https://maarab-sfu.github.io Mohammad Amin Arab] | ||

| − | * Puria Azadi | + | * Ali Ghorbanpour |

| + | * Puria Azadi (graduated) | ||

* [https://www.cs.sfu.ca/~mhefeeda/ Mohamed Hefeeda] | * [https://www.cs.sfu.ca/~mhefeeda/ Mohamed Hefeeda] | ||

| + | |||

| + | |||

| + | '''FlexMark: Adaptive Watermarking Method for Images''' | ||

| + | |||

| + | {| | ||

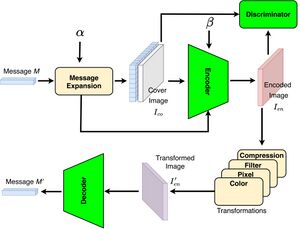

| + | | Most current watermarking methods offer low and fixed capacity, which means they can only embed small-size watermarks into images. Additionally, they are typically robust to only a small subset of the known image transformations (aka distortions) that occur during the processing, transmission, and storage of images. These shortcomings limit their adoption in many practical multimedia applications. We propose FlexMark, a robust and adaptive watermarking method for images, which achieves a better capacity-robustness trade-off than current methods and can easily be used for different applications. FlexMark categorizes and models the fundamental aspects of various image transformations, enabling it to achieve high accuracy in the presence of many practical transformations. | ||

| + | |||

| + | FlexMark introduces new ideas to further improve the performance, including double-embedding of the input message, employing self-attention layers to identify the most suitable regions in the image to embed the watermark bits, and utilization of a discriminator to improve the visual quality of watermarked images. In addition, FlexMark offers a parameter, α, to enable users to control the trade-off between robustness and capacity to meet the requirements of different applications. We implement FlexMark and assess its performance using datasets commonly used in this domain. Our results show that FlexMark is robust against a wide range of image transformations, including ones that were never seen during its training, which shows its generality and practicality. Our results also show that FlexMark substantially outperforms the closest methods in the literature in terms of capacity and robustness. | ||

| + | || | ||

| + | [[File:FlexMark.jpg|thumb|right]] | ||

| + | |} | ||

| Line 10: | Line 22: | ||

'''Revealing True Identity: Detecting Makeup Attacks in Face-based Biometric Systems''' | '''Revealing True Identity: Detecting Makeup Attacks in Face-based Biometric Systems''' | ||

| − | Face-based authentication systems are among the most | + | {| |

| + | | Face-based authentication systems are among the most used biometric systems, because of the ease of capturing face images in a non-intrusive way. These systems are, however, susceptible to various attacks, including printed faces, artificial masks, and makeup attacks. Makeup attacks are the hardest to detect in biometric systems because makeup can substantially alter the facial features of a person, including making them appear older/younger, modifying the shape of their eyebrows/beard/mustache, and changing the color of their lips and cheeks (Fig. 1). Makeup can even trick human agents trying to identify a person standing before them. | ||

| + | |||

| + | |||

| + | We proposed a novel solution to detect makeup attacks. The idea of our approach is to design a generative adversarial network (Figure 2) for removing makeup from face images while retaining their essential facial features and then comparing the face images before and after removing makeup. Our approach allows biometric systems to efficiently detect various combinations of makeup, which can be numerous and changing with time. This is in contrast to prior approaches, which require substantial amounts of “labeled data” to train the biometric system for all possible makeup combinations. This is difficult to achieve in practice and thus makes current biometric systems vulnerable to sophisticated makeup attacks. | ||

| + | || | ||

| + | [[File:TrueIdentity.png|thumb|right]] | ||

| + | |} | ||

| + | |||

| + | We collected a unique dataset of what we call malicious makeup, which is makeup purposely applied to deceive security systems, especially unattended ones where there are no humans to question the potentially weird looks of the makeup. Using extensive objective and subjective studies, we showed that our solution produces high detection accuracy and substantially outperforms the state-of-the-art. | ||

| + | |||

| + | This work was supported by '''IARPA''' through a sub-contract from the University of Southern California (USC), and it was done in collaboration with Dr. Wael Abd-Almageed and Dr. Mohamed Hussein of ISI at USC. | ||

== Code and Datasets == | == Code and Datasets == | ||

| − | + | https://github.com/maarab-sfu/FlexMark | |

| + | |||

| + | https://github.com/maarab-sfu/revealing-true-identity | ||

== Publications == | == Publications == | ||

| − | * M. Arab, P. Azadi Moghadam, M. Hussein, W. Abd-Almageed, and M. Hefeeda. [https://www2.cs.sfu.ca/~mhefeeda/Papers/mm20_makeup.pdf Revealing True Identity: Detecting Makeup Attacks in Face-based Biometric Systems], In Proc. of ACM Multimedia Conference (MM'20), Seattle, WA, October 2020. | + | * M. A. Arab, A. Ghorbanpour, and M. Hefeeda. [https://www2.cs.sfu.ca/~mhefeeda/Papers/mmsys24_flexmark.pdf FlexMark: Adaptive Watermarking Method for Images], In Proc. of Multimedia Systems Conference (MMSys'24), Bari, Italy, April 2024. |

| + | |||

| + | * M. A. Arab, P. Azadi Moghadam, M. Hussein, W. Abd-Almageed, and M. Hefeeda. [https://www2.cs.sfu.ca/~mhefeeda/Papers/mm20_makeup.pdf Revealing True Identity: Detecting Makeup Attacks in Face-based Biometric Systems], In Proc. of ACM Multimedia Conference (MM'20), Seattle, WA, October 2020. | ||

Latest revision as of 10:49, 22 August 2024

Recent advances in machine learning have made it easier to create fake images and videos. Users and computing systems face increasing difficulty in distinguishing forged content from original content. In this project, we focus on various aspects of detecting fake content, ranging from detecting makeup attacks in biometric systems to identifying forged videos and images.

People

- Mohammad Amin Arab (https://maarab-sfu.github.io)

- Ali Ghorbanpour

- Puria Azadi (graduated)

- Mohamed Hefeeda (https://www.cs.sfu.ca/~mhefeeda/)

FlexMark: Adaptive Watermarking Method for Images

Most current watermarking methods offer low and fixed capacity, which means they can only embed small watermarks into images. Additionally, they are typically robust to only a small subset of the known image transformations (also known as distortions) that occur during the processing, transmission, and storage of images. These shortcomings limit their adoption in many practical multimedia applications. We propose FlexMark, a robust and adaptive watermarking method for images, which achieves a better capacity-robustness trade-off than current methods and can easily be used for different applications. FlexMark categorizes and models the fundamental aspects of various image transformations, enabling it to achieve high accuracy in the presence of many practical transformations.

FlexMark introduces new ideas to further improve performance, including double-embedding the input message, employing self-attention layers to identify the most suitable regions in the image for embedding the watermark bits, and using a discriminator to improve the visual quality of watermarked images. In addition, FlexMark offers a parameter, α, that lets users control the trade-off between robustness and capacity to meet the requirements of different applications. We implement FlexMark and assess its performance using datasets commonly used in this domain. Our results show that FlexMark is robust against a wide range of image transformations, including ones never seen during training, which demonstrates its generality and practicality. Our results also show that FlexMark substantially outperforms the closest methods in the literature in terms of capacity and robustness.
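To make the capacity-robustness trade-off concrete, here is a minimal sketch that uses a plain repetition code. It is not FlexMark's embedding method, and the `redundancy` knob is a hypothetical stand-in that only plays a role loosely analogous to α: more copies of each bit leave room for fewer message bits but survive more distortion. All function names are illustrative assumptions.

```python
import random


def embed(bits, redundancy):
    """Repeat each message bit `redundancy` times (a simple repetition code)."""
    return [b for b in bits for _ in range(redundancy)]


def distort(coded, flip_prob, rng):
    """Simulate a lossy transformation: flip each bit with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in coded]


def extract(coded, redundancy):
    """Recover each message bit by majority vote over its copies.

    Ties (possible only for even redundancy) resolve to 0.
    """
    recovered = []
    for i in range(0, len(coded), redundancy):
        group = coded[i:i + redundancy]
        recovered.append(1 if sum(group) * 2 > len(group) else 0)
    return recovered


def bit_accuracy(sent, received):
    """Fraction of message bits recovered correctly."""
    return sum(s == r for s, r in zip(sent, received)) / len(sent)


def capacity(slots, redundancy):
    """Number of message bits that fit into a fixed number of embedding slots."""
    return slots // redundancy


if __name__ == "__main__":
    rng = random.Random(0)   # fixed seed so repeated runs are comparable
    slots = 300              # hypothetical number of embedding positions
    for redundancy in (1, 3, 5):
        n_bits = capacity(slots, redundancy)
        message = [rng.randint(0, 1) for _ in range(n_bits)]
        noisy = distort(embed(message, redundancy), flip_prob=0.1, rng=rng)
        accuracy = bit_accuracy(message, extract(noisy, redundancy))
        print(f"redundancy={redundancy} capacity={n_bits} accuracy={accuracy:.3f}")
```

Running the script prints one line per redundancy level, showing capacity shrinking as redundancy grows; accuracy under the simulated bit flips typically rises in exchange.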

Revealing True Identity: Detecting Makeup Attacks in Face-based Biometric Systems

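Face-based authentication systems are among the most widely used biometric systems because face images are easy to capture in a non-intrusive way. These systems are, however, susceptible to various attacks, including printed faces, artificial masks, and makeup attacks. Makeup attacks are the hardest to detect in biometric systems because makeup can substantially alter the facial features of a person, for example by making them appear older or younger, modifying the shape of their eyebrows, beard, or mustache, and changing the color of their lips and cheeks (Fig. 1). Makeup can even trick human agents trying to identify a person standing before them.

We proposed a novel solution to detect makeup attacks. The idea is to design a generative adversarial network (Figure 2) that removes makeup from face images while retaining their essential facial features, and then to compare the face images before and after makeup removal. This allows biometric systems to efficiently detect the many combinations of makeup, which are numerous and change over time. Prior approaches, in contrast, require substantial amounts of labeled data to train the biometric system for all possible makeup combinations, which is difficult to achieve in practice and leaves current biometric systems vulnerable to sophisticated makeup attacks.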
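The approach rests on comparing a face image before and after makeup removal. The sketch below shows one way such a comparison could be wired up. It is an illustration under assumptions, not the method from the paper: the `remove_makeup` and `extract_features` callables are hypothetical placeholders for the generative network and a face feature extractor, and the cosine distance and the threshold value are arbitrary choices.

```python
import math
from typing import Any, Callable, Sequence

Vector = Sequence[float]


def cosine_distance(a: Vector, b: Vector) -> float:
    """Return 1 - cosine similarity of two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("feature vectors must be non-zero")
    return 1.0 - dot / (norm_a * norm_b)


def is_makeup_attack(
    image: Any,
    remove_makeup: Callable[[Any], Any],        # hypothetical: stands in for the GAN generator
    extract_features: Callable[[Any], Vector],  # hypothetical: stands in for a face embedder
    threshold: float = 0.3,                     # assumed value; would need tuning on real data
) -> bool:
    """Flag the image if removing makeup changes its face features too much."""
    before = extract_features(image)
    after = extract_features(remove_makeup(image))
    return cosine_distance(before, after) > threshold


if __name__ == "__main__":
    # Toy stand-ins: an "image" is already a feature vector here.
    identity = lambda img: img
    heavy_change = lambda img: [img[1], img[0]]
    features = lambda img: img

    print(is_makeup_attack([1.0, 0.0], identity, features))
    print(is_makeup_attack([1.0, 0.0], heavy_change, features))
```

Passing the two models in as callables keeps the decision rule independent of any particular network, so the same check can be reused with different makeup removers or feature extractors.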

We collected a unique dataset of what we call malicious makeup: makeup purposely applied to deceive security systems, especially unattended ones where no human is present to question the potentially unusual appearance of the makeup. Using extensive objective and subjective studies, we showed that our solution achieves high detection accuracy and substantially outperforms the state of the art.

This work was supported by IARPA through a sub-contract from the University of Southern California (USC), and it was done in collaboration with Dr. Wael Abd-Almageed and Dr. Mohamed Hussein of ISI at USC.

Code and Datasets

https://github.com/maarab-sfu/FlexMark

https://github.com/maarab-sfu/revealing-true-identity

Publications

- M. A. Arab, A. Ghorbanpour, and M. Hefeeda. FlexMark: Adaptive Watermarking Method for Images (https://www2.cs.sfu.ca/~mhefeeda/Papers/mmsys24_flexmark.pdf), In Proc. of Multimedia Systems Conference (MMSys'24), Bari, Italy, April 2024.

- M. A. Arab, P. Azadi Moghadam, M. Hussein, W. Abd-Almageed, and M. Hefeeda. Revealing True Identity: Detecting Makeup Attacks in Face-based Biometric Systems (https://www2.cs.sfu.ca/~mhefeeda/Papers/mm20_makeup.pdf), In Proc. of ACM Multimedia Conference (MM'20), Seattle, WA, October 2020.