Hyperspectral Imaging

Latest revision as of 22:55, 11 November 2025

A hyperspectral camera captures a scene in many wavelength bands across the spectrum. This provides rich information that enables numerous applications in industrial, military, and commercial domains, including medical diagnosis (e.g., early detection of skin cancer), food-quality inspection, artwork authentication, forest monitoring, material identification, and remote sensing. Although the potential of hyperspectral imaging has been recognized for decades, to date it has succeeded only in specialized, large-scale industrial and military applications. This is due to three main challenges: (i) the sheer volume of hyperspectral data, which makes real-time transmission difficult and thus limits its usefulness for many applications; (ii) the negative impact of environmental conditions (e.g., rain, fog, and snow), which reduces the utility of the captured hyperspectral data; and (iii) the high cost of hyperspectral cameras (upwards of US$20K), which puts the technology out of reach for many commercial and end-user applications. The goal of this project is to address these challenges and enable the wide adoption of hyperspectral imaging.
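To make challenge (i) concrete, the back-of-the-envelope sketch below compares the uncompressed data rate of an RGB video stream with that of a hyperspectral stream. The sensor parameters (1920x1080 pixels, 200 bands, 12-bit samples, 30 frames per second) are hypothetical values chosen only for illustration; they are not taken from this project or from any specific camera.

```python
def raw_data_rate_mbps(width, height, bands, bits_per_sample, fps):
    """Uncompressed data rate in megabits per second (1 Mb = 10**6 bits)."""
    bits_per_frame = width * height * bands * bits_per_sample
    return bits_per_frame * fps / 1e6


# Hypothetical sensor parameters, chosen only for illustration.
rgb = raw_data_rate_mbps(1920, 1080, bands=3, bits_per_sample=8, fps=30)
hsi = raw_data_rate_mbps(1920, 1080, bands=200, bits_per_sample=12, fps=30)

print(f"RGB video:          {rgb:,.0f} Mb/s")
print(f"Hyperspectral cube: {hsi:,.0f} Mb/s ({hsi / rgb:.0f}x more)")
```

With these assumed numbers, the uncompressed hyperspectral stream is 100 times larger than the RGB stream (roughly 149 Gb/s versus 1.5 Gb/s), because the per-pixel payload grows from 3 x 8 = 24 bits to 200 x 12 = 2400 bits.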

MobiSpectral: Hyperspectral Imaging on Mobile Devices

Hyperspectral imaging systems capture information in multiple wavelength bands across the electromagnetic spectrum. These bands reveal substantial details about the optical properties of the materials in the captured scene. However, the high cost of hyperspectral cameras and their strict illumination requirements put the technology out of reach for end-user and small-scale commercial applications. We propose MobiSpectral, which turns a low-cost phone into a simple hyperspectral imaging system without any hardware changes. We design deep learning models that take regular RGB images and near-infrared (NIR) signals (captured by the sensors used for face identification on recent phones) and reconstruct multiple hyperspectral bands in the visible and NIR ranges of the spectrum. Our experimental results show that MobiSpectral produces accurate bands that are comparable to those captured by actual hyperspectral cameras. Because these bands reveal information hidden from regular cameras, they enable novel mobile applications that are not currently possible. To demonstrate the potential of MobiSpectral, we use it to identify organic solid foods, a challenging food-fraud problem that is currently only partially addressed by laborious, unscalable, and expensive processes. We collect large datasets in real environments under diverse illumination conditions to evaluate MobiSpectral. Our results show that MobiSpectral can identify organic foods, e.g., apples, tomatoes, kiwis, strawberries, and blueberries, with an accuracy of up to 94% from images taken by phones.
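MobiSpectral uses deep learning models for the RGB+NIR to hyperspectral mapping. The sketch below swaps in the simplest possible stand-in, a per-pixel linear least-squares map from the four input channels (R, G, B, NIR) to N bands, only to make the inputs and outputs of spectral reconstruction concrete. It is not the MobiSpectral model; the band count, the synthetic training data, and the function names `fit_linear_reconstruction` and `reconstruct` are hypothetical.

```python
import numpy as np


def fit_linear_reconstruction(inputs, spectra):
    """Fit W so that [inputs, 1] @ W approximates spectra in the least-squares sense.

    inputs:  (num_pixels, 4) array of R, G, B, NIR values per pixel.
    spectra: (num_pixels, num_bands) array of reference hyperspectral values.
    """
    # Append a constant column so the model can learn a per-band offset.
    X = np.hstack([inputs, np.ones((inputs.shape[0], 1))])
    W, *_ = np.linalg.lstsq(X, spectra, rcond=None)
    return W  # shape (5, num_bands)


def reconstruct(inputs, W):
    """Apply the fitted linear map to new RGB+NIR pixels."""
    X = np.hstack([inputs, np.ones((inputs.shape[0], 1))])
    return X @ W


# Synthetic demo: reference spectra generated from a known linear map plus offset.
rng = np.random.default_rng(0)
num_pixels, num_bands = 1000, 31  # hypothetical sizes
true_W = rng.uniform(0.0, 1.0, size=(5, num_bands))
inputs = rng.uniform(0.0, 1.0, size=(num_pixels, 4))
spectra = np.hstack([inputs, np.ones((num_pixels, 1))]) @ true_W

W = fit_linear_reconstruction(inputs, spectra)
error = np.abs(reconstruct(inputs, W) - spectra).max()
print(f"max reconstruction error: {error:.2e}")
```

A purely per-pixel linear map can only produce spectra that lie in a low-dimensional subspace spanned by its five rows, which is one motivation for richer learned models that can exploit nonlinear and spatial cues.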

MobiLyzer: Fine-grained Mobile Liquid Analyzer

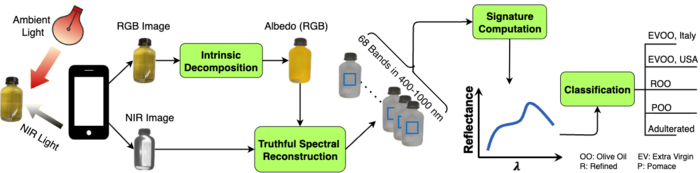

Most current methods for liquid analysis and fraud detection rely on expensive tools and controlled lab environments, making them inaccessible to lay users. We present MobiLyzer, a mobile system that enables fine-grained liquid analysis on unmodified commodity smartphones in realistic environments such as homes and grocery stores. MobiLyzer conducts spectral analysis of liquids based on how their chemical components reflect different wavelengths. Conducting spectral analysis of liquids on smartphones, however, is challenging due to the limited sensing capabilities of smartphones and the heterogeneity of their camera designs. This is further complicated by uncontrolled ambient illumination and the diversity of liquid containers: ambient illumination, for example, distorts the measured spectra, and liquid containers cause specular reflections that degrade accuracy. To address these challenges, MobiLyzer starts from RGB images captured by regular smartphone cameras and applies intrinsic decomposition ideas to mitigate the effects of illumination and interference from liquid containers. It further leverages the near-infrared (NIR) sensors on smartphones to collect complementary signals in the NIR range, partially compensating for the limited sensing capabilities of smartphones. Finally, it presents a new machine-learning model that reconstructs the entire spectrum in the visible and NIR ranges from the captured RGB and NIR images, enabling fine-grained spectral analysis of liquids on smartphones without expensive equipment. Unlike prior models, this spectral reconstruction model preserves the original RGB colors during reconstruction, which is critical for liquid analysis because many liquids differ only in subtle spectral cues. We demonstrate the accuracy and robustness of MobiLyzer through extensive experiments with multiple liquids, four different smartphones, and seven illumination sources. Our results show, for example, that MobiLyzer can accurately detect adulteration at small ratios, identify quality grades of the same liquid (e.g., refined vs. extra virgin olive oil), differentiate the country of origin of oils (e.g., olive oil from Italy vs. the USA), and analyze the concentration of materials in liquids (e.g., protein concentration in urine for early detection of kidney diseases).
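One generic way to make a reconstructed spectrum consistent with the original RGB colors, assuming a linear camera model rgb = C s with a known 3 x N spectral sensitivity matrix C, is to project the spectrum onto the set of spectra that reproduce the observed RGB values exactly. The sketch below implements this standard least-norm projection, s' = s + C^T (C C^T)^-1 (rgb - C s). It is only an illustration of the color-preservation idea, not necessarily the mechanism MobiLyzer uses; the matrix C, the band count, and the function name `project_to_rgb` are hypothetical.

```python
import numpy as np


def project_to_rgb(spectrum, rgb, C):
    """Return the spectrum closest to `spectrum` (in L2) whose RGB under C equals `rgb`.

    spectrum: (N,) reconstructed spectrum
    rgb:      (3,) observed camera values
    C:        (3, N) camera spectral sensitivity matrix (assumed known, full row rank)
    """
    residual = rgb - C @ spectrum  # color error of the current estimate
    # Least-norm correction: lies in the row space of C and cancels the color error.
    correction = C.T @ np.linalg.solve(C @ C.T, residual)
    return spectrum + correction


rng = np.random.default_rng(1)
N = 31  # hypothetical number of bands
C = rng.uniform(0.0, 1.0, size=(3, N))
true_spectrum = rng.uniform(0.0, 1.0, size=N)
rgb = C @ true_spectrum
estimate = true_spectrum + rng.normal(0.0, 0.05, size=N)  # imperfect reconstruction

corrected = project_to_rgb(estimate, rgb, C)
print(np.allclose(C @ corrected, rgb))
```

Because the true spectrum also reproduces the observed RGB values, this orthogonal projection never moves the estimate farther from it. A practical system would additionally need to handle constraints such as non-negative spectra, which this sketch ignores.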

People

- Neha Sharma

- Muhammad Shahzaib Waseem

- Shahrzad Mirzaei

- Mariam Bebawy

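Codes and Datasets

- MobiLyzer: Fine-grained Mobile Liquid Analyzer (https://github.com/chercode/mobilyzer-imwut25)

- GlucoSense: Non-Invasive Glucose Monitoring using Mobile Devices (https://github.com/mariam-bebawy/glucosense-mobicom25)

- RipeTrack: Assessing Fruit Ripeness using Smartphones (https://github.com/ShahzaibWaseem/RipeTrack)

- MobiSpectral: Hyperspectral Imaging on Mobile Devices (https://github.com/mobispectral/mobicom23_mobispectral)

- Enabling Hyperspectral Imaging in Diverse Illumination Conditions for Indoor Applications (https://github.com/pazadimo/HS_In_Diverse_Illuminations)

- Hyperspectral Reconstruction from RGB Images for Vein Visualization (https://github.com/nehasharma512/vein-visualization)

- Band and Quality Selection for Efficient Transmission of Hyperspectral Images (https://github.com/maarab-sfu/BQSETHI)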

Publications

- S. Mirzaei, M. Bebawy, A. M. Sharafeldin, and M. Hefeeda, MobiLyzer: Fine-grained Mobile Liquid Analyzer, Proc. ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT'25), vol. 9, no. 4, Article 201, pp. 1-37, December 2025.

- M. Waseem, N. Sharma, and M. Hefeeda, RipeTrack: Assessing Fruit Ripeness and Remaining Lifetime using Smartphones, IEEE Transactions on Mobile Computing, pp. 1-16, accepted August 2025.

- N. Sharma, M. Bebawy, Y. Ng, and M. Hefeeda, GlucoSense: Non-Invasive Glucose Monitoring using Mobile Devices, In Proc. of ACM Conference on Mobile Computing and Networking (MobiCom'25), Hong Kong, China, November 2025.

- N. Sharma, M. Waseem, S. Mirzaei, and M. Hefeeda, MobiSpectral: Hyperspectral Imaging on Mobile Devices, In Proc. of ACM Conference on Mobile Computing and Networking (MobiCom'23), Madrid, Spain, October 2023. (Artifacts Evaluated and Functional)

- P. Moghadam, N. Sharma, and M. Hefeeda, Enabling Hyperspectral Imaging in Diverse Illumination Conditions for Indoor Applications, In Proc. of ACM Multimedia Systems Conference (MMSys'21), Istanbul, Turkey, September 2021. (Artifacts Evaluated and Functional)

- N. Sharma and M. Hefeeda, Hyperspectral Reconstruction from RGB Images for Vein Visualization, In Proc. of ACM Multimedia Systems Conference (MMSys'20), Istanbul, Turkey, June 2020. (Artifacts Evaluated and Functional)

- M. Arab, K. Calagari, and M. Hefeeda, Band and Quality Selection for Efficient Transmission of Hyperspectral Images, In Proc. of ACM Conference on Multimedia (MM'19), Nice, France, October 2019.