Modeling and Caching of P2P Traffic

| (34 intermediate revisions by 4 users not shown) | |||

| Line 5: | Line 5: | ||

latency. | latency. | ||

| − | We are developing caching algorithms that capitalize on the P2P traffic characteristics. We are also exploring the potential of cooperative caching of P2P traffic, where multiple caches deployed in different ASes (which could have a peering | + | We are developing caching algorithms that capitalize on the P2P traffic characteristics. We are also exploring the potential of cooperative caching of P2P traffic, where multiple caches deployed in different ASes (which could have a peering relationship) or within a large AS (e.g., a Tier-1 ISP) cooperate to serve traffic from each other`s clients. Cooperation reduces the load on expensive inter-ISP links. Furthermore, we are implementing all of our algorithms and ideas in a prototype caching system. |

| Line 12: | Line 12: | ||

* [http://www.cs.sfu.ca/~mhefeeda/ Mohamed Hefeeda] | * [http://www.cs.sfu.ca/~mhefeeda/ Mohamed Hefeeda] | ||

| − | * | + | * [http://www.sfu.ca/~cha16/ ChengHsin Hsu (PhD student)] |

| − | * Kianoosh Mokhtarian (MSc Student) | + | * [http://www.cs.sfu.ca/~kma26/personal/ Kianoosh Mokhtarian (MSc Student)] |

* Behrooz Noorizadeh (MSc Student, Graduated Fall 2007) | * Behrooz Noorizadeh (MSc Student, Graduated Fall 2007) | ||

| Line 23: | Line 23: | ||

== Publications == | == Publications == | ||

| − | * M. Hefeeda and | + | * M. Hefeeda, C. Hsu, and K. Mokhtarian, [http://www.cs.sfu.ca/~mhefeeda/Papers/tc11.pdf Design and Evaluation of a Proxy Cache for Peer to Peer Traffic], ''IEEE Transactions on Computers'', 60(7), pp. 964--977, July 2011. |

| − | * M. Hefeeda and B. Noorizadeh, Cooperative Caching | + | * M. Hefeeda and B. Noorizadeh, [http://www.cs.sfu.ca/~mhefeeda/Papers/tpds10_caching.pdf On the Benefits of Cooperative Proxy Caching for Peer-to-Peer Traffic], ''IEEE Transactions on Parallel and Distributed Systems'', 21(7), pp. 998--1010, July 2010.. |

| − | * O. Saleh and M. Hefeeda, [http://www.cs.sfu.ca/~mhefeeda/Papers/icnp06.pdf Modeling and Caching of Peer-to-Peer Traffic] , In Proc. of IEEE International Conference on Network Protocols (ICNP'06), pp. 249--258, Santa Barbara, CA, November 2006. (Acceptance: 14%) | + | * M. Hefeeda and O. Saleh, [http://www.cs.sfu.ca/~mhefeeda/Papers/ton08.pdf Traffic Modeling and Proportional Partial Caching for Peer-to-Peer Systems], ''IEEE/ACM Transactions on Networking,'' 16(6), pp. 1447--1460, December 2008. |

| + | |||

| + | * M. Hefeeda, C. Hsu, and K. Mokhtarian, [http://www.cs.sfu.ca/~mhefeeda/Papers/sigcomm08demo_abstract.pdf pCache: A Proxy Cache for Peer-to-Peer Traffic], ACM SIGCOMM'08 Technical Demonstration, Seattle, WA, August 2008. [[http://www.cs.sfu.ca/~mhefeeda/Papers/sigcomm08demo.pdf Poster: pdf]] [[http://www.cs.sfu.ca/~mhefeeda/Papers/sigcomm08demo.ppt Poster: ppt]]. | ||

| + | |||

| + | * M. Hefeeda and B. Noorizadeh, [http://www.cs.sfu.ca/~mhefeeda/Papers/lcn08.pdf Cooperative Caching: The Case for P2P Traffic], In Proc. of IEEE Conference on Local Computer Networks (LCN'08), Montreal, Canada, October 2008. | ||

| + | |||

| + | * O. Saleh and M. Hefeeda, [http://www.cs.sfu.ca/~mhefeeda/Papers/icnp06.pdf Modeling and Caching of Peer-to-Peer Traffic] , In Proc. of IEEE International Conference on Network Protocols (ICNP'06), pp. 249--258, Santa Barbara, CA, November 2006. (Acceptance: 14%) | ||

== pCache Software == | == pCache Software == | ||

| − | + | [[Image:caching.jpg|frame|right|P2P Caching]] | |

| − | |||

We have designed and implemented a proxy cache for P2P traffic, which we call | We have designed and implemented a proxy cache for P2P traffic, which we call | ||

pCache. pCache is to be deployed by autonomous systems (ASes) or ISPs that | pCache. pCache is to be deployed by autonomous systems (ASes) or ISPs that | ||

| Line 59: | Line 64: | ||

lead to decreased availability of peers and the content stored on them. | lead to decreased availability of peers and the content stored on them. | ||

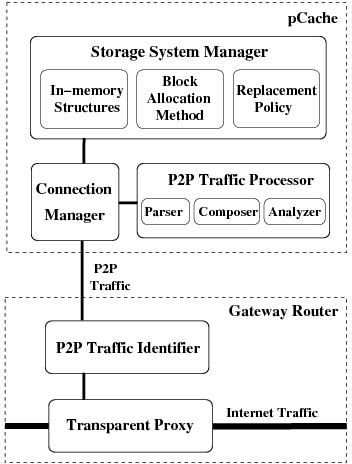

| + | The following figure demonstrates the main components of pCache. A brief description | ||

| + | of each component is given in [[pCacheOverview|pCache overview page]]. | ||

| + | Detailed design and performance evaluations are presented in | ||

| + | our IEEE Transactions on Computers paper. | ||

| − | + | [[Image:pCache-design.jpg|center|Components]] | |

| − | === | + | === Browse and Download Code === |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | This project has been concluded and no future releases are scheduled. [https://cs-nsl-svn.cs.surrey.sfu.ca/cssvn/nsl-projects/P2P/p2pCache/code The SVN repository is here.] (for local access only). | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | pCache code is released under [http://www.gnu.org/licenses/gpl-3.0.txt GPLv3]. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | pCache has two parts: Patched Linux Kernel and pCache Application. The Patched Linux Kernel consists of all required patches to support transparent proxy, which simplifies setting up required environment. This patched kernel contains code from [http://www.kernel.org/ mainstream Linux kernel], [http://www.netfilter.org/ netfilter], [http://www.balabit.com/support/community/products/tproxy/ tproxy], and [http://www.linux-l7sw.org/ layer7switch]. The pCache Application implements components described above. Moreover, a patched iptables is also provided that takes additional arguments supported by [http://www.balabit.com/support/community/products/tproxy/ tproxy]. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * Patched Linux Kernel [[media:linux-2.6.23.tgz]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * pCache Application Source Code [[media:pCache-0.0.1.tgz]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | * iptables (patched for additional araguments) [[media:iptables-1.4.0rc1.tgz]] | ||

| − | |||

| − | + | To setup your pCache system, please follow these simple steps: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * Download the Patched Linux Kernel, compile and install it. Notice that, this tar file also include a sample .config file. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | * Download the patched iptables, compile and install it. (Please see the INSTALL file included in the tar file for installation instruction) | ||

| − | + | * Download the pCache Application source code, compile it for a binary called pCache. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | * Download the pCache source code, compile it for a binary called pCache. | ||

To run pCache, first configure forwarding table. For example, we use the following script to configure our forwarding table: | To run pCache, first configure forwarding table. For example, we use the following script to configure our forwarding table: | ||

| Line 326: | Line 127: | ||

After setting up the conf.txt file, run pCache in command-line. A log.txt will be generated for detailed debugging messages. pCache also provides a Web-based monitoring interface, which can be access by connecting to ''http://<your-up>:8000'' using any Web browser. The interface looks like: | After setting up the conf.txt file, run pCache in command-line. A log.txt will be generated for detailed debugging messages. pCache also provides a Web-based monitoring interface, which can be access by connecting to ''http://<your-up>:8000'' using any Web browser. The interface looks like: | ||

| − | [[Image:pCache_Web.jpg|center|border| | + | [[Image:pCache_Web.jpg|center|border|576px]] |

Meanwhile, pCache also includes an applet to report real-time events. This applet can be launched by clicking the ''Details'' button. The applet looks like: | Meanwhile, pCache also includes an applet to report real-time events. This applet can be launched by clicking the ''Details'' button. The applet looks like: | ||

| − | [[Image:pCache_Applet.jpg|center|border| | + | [[Image:pCache_Applet.jpg|center|border|402px]] |

=== Future Enhancements === | === Future Enhancements === | ||

| − | + | The following is list of possible improvements to pCache. The items are roughly listed by priority. We also list the | |

| − | + | person-day estimation on each item, considering a good graduate student who works 8 hours a day. The estimations include unit-tests. | |

| − | |||

| − | + | #Nonvolatile storage system: To keep cached data across reboots, we need to write in-memory metainfo to the super blocks (in pCache file systems). This require save(...)/restore(...) functions for a few hashtables in-memory. This task needs 4~5 ppl-day. | |

| + | #Proxy performance: We should integrate our code with the latest tproxy for better performance (we can ignore the tcp splicing part for now). After integration, we should quantify the performance of tproxy (by emulating a large number of P2P clients in two private subnets). If possible we can identify the bottlenecks in tproxy, and improve it. We then can contribute the code back to the community. This can be a small/side research project. TProxy integration takes 1 ppl-day. Designing/Implementing the emulation and getting a write-up on comparison and bottleneck analysis takes 5~10 ppl-day. | ||

| + | #Event-driven connection manager: We should define a stateful connection class, rewrite the connection manager into an event handler, use epoll (for network) and aio (for disk) to improve scalability. Finally, a test similar to the one in TProxy test should be performed. Designing it takes 4 ppl-day. Implementing it takes 8~10 ppl-day. Evaluating it takes 3 ppl-day, assuming we have gained experiences from evaluating TProxy. | ||

| + | #Simpler segment matching: For every incoming request, we either request it in its entirety or we don't request it at all. Current partial request code is over-complicated.This takes 1 ppl-day, but may depend on (overlap with) event-driven connection manager. | ||

| + | #Improve the compatibility: Identify the unsupported BT/Gnutella clients, and locate the root causes (which message types cause the problem). Then fix it. I imagine that this will take some time. I cannot come up with a time estimation as of now. | ||

| + | #Better logging system: We currently use a home-made logging system, but in an inconsistent way: some modules log through stderr rather than the logging system. If time permitted, we may switch to an open-source logging library similar to log4c. This takes 5~7 ppl-day, given that there are many logging statements in the system. | ||

| − | |||

=== Feedback and Comments === | === Feedback and Comments === | ||

We welcome all comments and suggestions. You can enter your comments [http://www.sfu.ca/~cha16/feedback.html here]. | We welcome all comments and suggestions. You can enter your comments [http://www.sfu.ca/~cha16/feedback.html here]. | ||

| + | |||

=== Related Caching Systems and Commercial Products === | === Related Caching Systems and Commercial Products === | ||

| Line 351: | Line 156: | ||

Not to mention that our prototype software is open-source, while the above products are commercial and very expensive. | Not to mention that our prototype software is open-source, while the above products are commercial and very expensive. | ||

| + | |||

| + | === On-going Research Problems=== | ||

| + | |||

| + | We list the current research problems and draft solutions [[Private:pCache_progress|a separate document (login required)]]. | ||

== P2P Traffic Traces == | == P2P Traffic Traces == | ||

| − | * | + | * Brief description of our traces can be found in this [http://nsl.cs.surrey.sfu.ca/projects/p2p/traces_readme.txt readme.txt] file. The trace files can be downloaded [http://nsl.cs.sfu.ca/traces/p2p/anonymized/ here]. |

Latest revision as of 02:45, 21 January 2015

Peer-to-peer (P2P) file sharing systems generate a major portion of Internet traffic, and this portion is expected to increase in the future. The sheer volume and expected high growth of P2P traffic have negative consequences, including: (i) significantly increased load on the Internet backbone, hence, higher chances of congestion; and (ii) increased cost for Internet Service Providers (ISPs), hence, higher service charges for all Internet users.

A potential solution for alleviating those negative impacts is to cache a fraction of the P2P traffic such that future requests for the same objects could be served from a cache in the requester’s autonomous system (AS). Caching in the Internet has mainly been considered for web and video streaming traffic, with little attention to P2P traffic. Many caching algorithms for web traffic and for video streaming systems have been proposed and analyzed. Directly applying such algorithms to cache P2P traffic may not yield the best cache performance, because of the different traffic characteristics and caching objectives. For instance, reducing user-perceived access latency is a key objective for web caches. Consequently, web caching algorithms often incorporate information about the cost (latency) of a cache miss when deciding which object to cache or evict. Although latency is important to P2P users, the goal of a P2P cache is often focused on the ISP’s primary concern, namely, the amount of bandwidth consumed by large P2P transfers. Consequently, the byte hit rate, i.e., the ratio of the number of bytes served from the cache to the total number of transferred bytes, is more important than latency.
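To make the difference between the two metrics concrete, the sketch below computes both the byte hit rate and the object (request) hit rate for a small, made-up request log. The log format (one `<size_in_bytes> <hit>` line per request) and the function name `hit_rates` are assumptions for illustration only; they are not part of pCache.

```sh
#!/bin/sh
# Sketch: byte hit rate (BHR) vs. object hit rate (OHR) for a made-up log.
# Input (hypothetical format): one "<size_in_bytes> <hit>" line per request,
# where <hit> is 1 if the object was served from the cache and 0 otherwise.
hit_rates() {
  awk '
    {
      total_bytes += $1
      requests += 1
      if ($2 == 1) { hit_bytes += $1; hits += 1 }
    }
    END {
      if (requests == 0 || total_bytes == 0) { print "no data"; exit }
      # BHR = bytes served from the cache / total bytes transferred
      # OHR = requests served from the cache / total requests
      printf "BHR=%.2f OHR=%.2f\n", hit_bytes / total_bytes, hits / requests
    }'
}

# Three 1 MB objects are cache hits; one 97 MB object is a miss.
printf '%s\n' "1000000 1" "1000000 1" "1000000 1" "97000000 0" | hit_rates
# prints: BHR=0.03 OHR=0.75
```

In this example 75% of the requests hit the cache, but only 3% of the bytes do. An ISP paying for external bandwidth cares about the second number, which is why a P2P cache is judged by its byte hit rate.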

We are developing caching algorithms that capitalize on the P2P traffic characteristics. We are also exploring the potential of cooperative caching of P2P traffic, where multiple caches deployed in different ASes (which could have a peering relationship) or within a large AS (e.g., a Tier-1 ISP) cooperate to serve traffic from each other's clients. Cooperation reduces the load on expensive inter-ISP links. Furthermore, we are implementing all of our algorithms and ideas in a prototype caching system.
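The preference order that cooperation suggests can be sketched as follows: serve from the local cache if possible, otherwise from a cooperating cache, and only then over the inter-ISP links. This is a hypothetical illustration, not the cooperative caching scheme studied in our papers. The `locate` function, the cache names, and the input format (`<cache-name> <object-id>` lines, with the cache that received the request called `local`) are all assumptions.

```sh
#!/bin/sh
# Hypothetical lookup order for cooperative caching:
#   1. the local cache that received the request,
#   2. a cooperating cache (e.g., in a peering AS or elsewhere in a large AS),
#   3. the external P2P network, reached over inter-ISP links.
# stdin: one "<cache-name> <object-id>" line per cached object;
# the cache that received the request is named "local".
locate() {
  awk -v obj="$1" '
    $2 == obj && $1 == "local" { in_local = 1 }
    $2 == obj && $1 != "local" && peer == "" { peer = $1 }
    END {
      if (in_local) print "local"
      else if (peer != "") print "peer " peer
      else print "external"
    }'
}

contents='local a
as2 b
as3 b
as3 c'
for obj in a b d; do
  printf '%s: ' "$obj"
  printf '%s\n' "$contents" | locate "$obj"
done
# prints:
# a: local
# b: peer as2
# d: external
```

Picking the first cooperating cache that holds the object is a deliberately naive choice; a real scheme would also weigh link costs and cache load.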

People

- Mohamed Hefeeda

- ChengHsin Hsu (PhD Student)

- Kianoosh Mokhtarian (MSc Student)

- Behrooz Noorizadeh (MSc Student, Graduated Fall 2007)

- Osama Saleh (MSc Student, Graduated Fall 2006)

Publications

- M. Hefeeda, C. Hsu, and K. Mokhtarian, Design and Evaluation of a Proxy Cache for Peer to Peer Traffic, IEEE Transactions on Computers, 60(7), pp. 964--977, July 2011.

- M. Hefeeda and B. Noorizadeh, On the Benefits of Cooperative Proxy Caching for Peer-to-Peer Traffic, IEEE Transactions on Parallel and Distributed Systems, 21(7), pp. 998--1010, July 2010.

- M. Hefeeda and O. Saleh, Traffic Modeling and Proportional Partial Caching for Peer-to-Peer Systems, IEEE/ACM Transactions on Networking, 16(6), pp. 1447--1460, December 2008.

- M. Hefeeda, C. Hsu, and K. Mokhtarian, pCache: A Proxy Cache for Peer-to-Peer Traffic, ACM SIGCOMM'08 Technical Demonstration, Seattle, WA, August 2008. [Poster: pdf] [Poster: ppt].

- M. Hefeeda and B. Noorizadeh, Cooperative Caching: The Case for P2P Traffic, In Proc. of IEEE Conference on Local Computer Networks (LCN'08), Montreal, Canada, October 2008.

- O. Saleh and M. Hefeeda, Modeling and Caching of Peer-to-Peer Traffic, In Proc. of IEEE International Conference on Network Protocols (ICNP'06), pp. 249--258, Santa Barbara, CA, November 2006. (Acceptance: 14%)

pCache Software

We have designed and implemented a proxy cache for P2P traffic, which we call pCache. pCache is intended to be deployed by autonomous systems (ASes) or ISPs that are interested in reducing the burden of P2P traffic. pCache would be deployed at or near the gateway router of an AS. At a high level, a client participating in a particular P2P network issues a request to download an object, and pCache intercepts this request. If the requested object, or parts of it, are stored in the cache, they are served to the requesting client. This saves bandwidth on the external (expensive) links to the Internet. If a part of the requested object is not found in the cache, the request is forwarded to the P2P network. When the response comes back, pCache may store a copy of the object for future requests from other clients in its AS. Neither the clients inside the AS nor external clients are aware of pCache, i.e., pCache is fully transparent in both directions.
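The handling described above (serve the cached parts locally and fetch only the missing parts from the P2P network) can be viewed as a range computation. The sketch below is a simplified illustration, not pCache's actual code. The `split_request` name, the use of half-open byte ranges `[start, end)`, and the input format (one `<start> <end>` line per cached range on stdin) are assumptions. A real P2P cache typically works with protocol-specific pieces or blocks rather than arbitrary byte offsets.

```sh
#!/bin/sh
# Hypothetical sketch: split a requested byte range [lo, hi) into sub-ranges
# served from the cache and sub-ranges forwarded to the P2P network.
# stdin: cached ranges of the same object, one half-open "<start> <end>" per
# line (ranges may overlap and need not be sorted).
split_request() {
  sort -n -k1,1 | awk -v lo="$1" -v hi="$2" '
    BEGIN { pos = lo + 0; hi += 0 }     # pos = first byte not yet assigned
    {
      s = $1 + 0; e = $2 + 0
      if (e <= pos || s >= hi) next     # range does not overlap what is left
      if (s > pos) { print "forward " pos " " s; pos = s }       # gap: cache miss
      top = (e < hi) ? e : hi
      if (top > pos) { print "cache " pos " " top; pos = top }   # cache hit
    }
    END { if (pos < hi) print "forward " pos " " hi }'  # uncached tail
}

# Request bytes [0, 1000); the cache holds [200, 400) and [600, 800).
printf '%s\n' "600 800" "200 400" | split_request 0 1000
# prints:
# forward 0 200
# cache 200 400
# forward 400 600
# cache 600 800
# forward 800 1000
```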

Our C++ implementation of pCache has more than 11,000 lines of code. We have rigorously validated and evaluated the performance of pCache as well as its impacts on ISPs and clients. Our experimental results show that pCache benefits both the clients and the ISP in which the cache is deployed, without hurting the performance of the P2P networks. Specifically, clients behind the cache achieve much higher download speeds than other clients running in the same conditions without the cache. In addition, a significant portion of the traffic is served from the cache, which reduces the load on the expensive WAN links for the ISP. Our results also show that the cache does not reduce the connectivity of clients behind it, nor does it reduce their upload speeds. This is important for the whole P2P network, because reduced connectivity could lead to decreased availability of peers and the content stored on them.

The following figure shows the main components of pCache. A brief description of each component is given on the pCache overview page. The detailed design and performance evaluation are presented in our IEEE Transactions on Computers paper.

Browse and Download Code

This project has concluded, and no future releases are scheduled. The SVN repository is here (local access only).

pCache code is released under GPLv3.

pCache has two parts: the Patched Linux Kernel and the pCache Application. The Patched Linux Kernel includes all patches required to support transparent proxying, which simplifies setting up the required environment. It contains code from the mainstream Linux kernel, netfilter, tproxy, and layer7switch. The pCache Application implements the components described above. In addition, a patched iptables is provided that accepts the additional arguments supported by tproxy.

- Patched Linux Kernel: linux-2.6.23.tgz

- pCache Application source code: pCache-0.0.1.tgz

- iptables (patched to accept the additional arguments): iptables-1.4.0rc1.tgz

To set up pCache, follow these steps:

- Download the Patched Linux Kernel, then compile and install it. Note that this tar file also includes a sample .config file.

- Download the patched iptables, then compile and install it (see the INSTALL file included in the tar file for installation instructions).

- Download the pCache Application source code and compile it to produce a binary called pCache.
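The first step can be sketched for a typical 2.6-series kernel as follows. This is not a script shipped with pCache. The unpacked directory name `linux-2.6.23/`, the path of the sample .config (passed as the first argument), and the helpers `run` and `build_kernel` are assumptions for illustration. With `DRY_RUN=1` the commands are only printed. The iptables and pCache steps are left out; for iptables, follow the INSTALL file in its tarball.

```sh
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Sketch of building and installing the patched kernel.
# Assumptions: the archive unpacks into linux-2.6.23/, and $1 is the path of
# the sample .config shipped in the tarball.
build_kernel() {
  src=linux-2.6.23
  run tar xzf linux-2.6.23.tgz &&
  run cp "$1" "$src/.config" &&
  run make -C "$src" oldconfig &&        # prompts only for options missing from .config
  run make -C "$src" &&                  # builds the kernel image and modules
  run make -C "$src" modules_install &&  # requires root
  run make -C "$src" install             # requires root
}

DRY_RUN=1 build_kernel sample.config
```

Remove `DRY_RUN=1` from the last line to execute the commands. The two install steps need root privileges, and on some distributions the boot loader has to be updated afterwards.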

To run pCache, first configure the forwarding table. For example, we use the following script to configure ours:

    iptables -t mangle -N DIVERT
    # bypass low ports
    iptables -t mangle -A PREROUTING -p tcp --sport 1:1024 -j ACCEPT
    iptables -t mangle -A PREROUTING -p tcp --dport 1:1024 -j ACCEPT
    # packets that belong to an existing local socket go to the DIVERT chain
    iptables -t mangle -A PREROUTING -p tcp -m socket -j DIVERT
    # redirect all other TCP traffic to the local proxy port 7072
    iptables -t mangle -A PREROUTING -p tcp -j TPROXY --tproxy-mark 0x1/0x1 --on-port 7072
    iptables -t mangle -A DIVERT -j MARK --set-mark 1
    iptables -t mangle -A DIVERT -j ACCEPT
    # deliver marked packets locally via routing table 100
    ip rule add fwmark 1 lookup 100
    ip route add local 0.0.0.0/0 dev lo table 100
    # disable TCP features that are not supported by the TCP splicing module
    sysctl -w net.ipv4.tcp_sack=0
    sysctl -w net.ipv4.tcp_dsack=0
    sysctl -w net.ipv4.tcp_window_scaling=0
    #sysctl -w net.ipv4.tcp_tw_recycle=1
    #echo 8 > /proc/sys/kernel/printk

Next, configure the conf.txt file in the pCache directory. The comments in conf.txt explain the purpose of each setting; the most important ones are briefly described below. Note that many settings are intended for experimental use only and are not useful in an actual deployment.

- BLOCK_SIZE and BLOCK_NUM determine the layout and the capacity of the cache storage on the hard disk. The resulting size (BLOCK_SIZE × BLOCK_NUM) must never exceed the actual disk size.

- ACCESOR_TYPE selects the hard-disk scheme to be used. The following schemes are supported: flat directory (1), single file on a file system (4), and single file on a raw disk (5). Other types are experimental only.

- ROOT_DIR or DEV_NAME defines the location of the file system. DEV_NAME is used for the raw-disk scheme, and ROOT_DIR for all other schemes. Examples of DEV_NAME include /dev/hda2 and /dev/sda1; an example of ROOT_DIR is /mnt/pCache, which must be mounted first.

- SUBNET and NETMASK define the local subnet. pCache inspects only outgoing requests, i.e., requests from clients in the local subnet; incoming requests are always forwarded.

- MAX_FILLED_SIZE and MIN_FILLED_SIZE determine when the cache replacement routine is invoked and when it stops, respectively.
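The relationships among these settings can be illustrated in a few lines of shell. The sketch below checks that BLOCK_SIZE × BLOCK_NUM fits on the disk, tests whether an address falls inside SUBNET/NETMASK, and applies a high/low-watermark reading of MAX_FILLED_SIZE and MIN_FILLED_SIZE. It is an illustration only, built on several assumptions: BLOCK_SIZE is in bytes, the fill sizes share one unit, and the caller supplies the eviction order (e.g., least recently used first). The function names are hypothetical, and pCache's actual replacement policy may differ.

```sh
#!/bin/sh
# BLOCK_SIZE * BLOCK_NUM must not exceed the disk size (all in bytes, assumed).
check_capacity() {   # $1=BLOCK_SIZE $2=BLOCK_NUM $3=disk size
  if [ $(( $1 * $2 )) -le "$3" ]; then echo ok; else echo "too large"; fi
}

# Dotted-quad IPv4 address -> 32-bit integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# Succeeds if address $1 lies in SUBNET=$2 with NETMASK=$3.
in_subnet() {
  ip=$(ip_to_int "$1"); net=$(ip_to_int "$2"); mask=$(ip_to_int "$3")
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Watermark replacement (assumed reading of the two settings): start evicting
# only when the fill level exceeds MAX_FILLED_SIZE, then evict until it is at
# or below MIN_FILLED_SIZE.
# stdin: "<object-id> <size>" lines in eviction order.
evict() {   # $1=current fill $2=MAX_FILLED_SIZE $3=MIN_FILLED_SIZE
  awk -v filled="$1" -v max="$2" -v min="$3" '
    BEGIN { filled += 0; max += 0; min += 0; active = (filled > max) }
    active && filled > min { print "evict " $1; filled -= $2 }
    END { print "filled " filled }'
}

check_capacity 4096 1000 10000000                                 # prints: ok
in_subnet 192.168.1.77 192.168.1.0 255.255.255.0 && echo local   # prints: local
printf '%s\n' "x 10" "y 10" "z 10" "w 10" | evict 95 90 70
# prints, one per line: evict x, evict y, evict z, filled 65
```

Using two thresholds instead of one keeps the replacement routine from running on every insertion once the cache is nearly full.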

After setting up conf.txt, run pCache from the command line. pCache writes detailed debugging messages to a log.txt file. pCache also provides a Web-based monitoring interface, which can be accessed by connecting to http://<your-ip>:8000 with any Web browser. The interface looks like:

pCache also includes an applet that reports real-time events. The applet can be launched by clicking the Details button. The applet looks like:

Future Enhancements

The following is a list of possible improvements to pCache, roughly in order of priority. For each item we also estimate the effort in person-days, assuming a good graduate student working 8 hours a day; the estimates include unit tests.

- Nonvolatile storage system: To keep cached data across reboots, we need to write the in-memory metainfo to the super blocks (in the pCache file systems). This requires save(...)/restore(...) functions for a few in-memory hash tables. Estimated effort: 4-5 person-days.

- Proxy performance: We should integrate our code with the latest tproxy for better performance (the TCP splicing part can be ignored for now). After integration, we should quantify the performance of tproxy by emulating a large number of P2P clients in two private subnets. If possible, we can then identify and fix the bottlenecks in tproxy and contribute the code back to the community. This could be a small side research project. Estimated effort: 1 person-day for the tproxy integration, and 5-10 person-days for designing and implementing the emulation and writing up the comparison and bottleneck analysis.

- Event-driven connection manager: We should define a stateful connection class, rewrite the connection manager as an event handler, and use epoll (for network I/O) and aio (for disk I/O) to improve scalability. Finally, the new manager should be evaluated with a test similar to the tproxy test above. Estimated effort: 4 person-days for the design, 8-10 person-days for the implementation, and 3 person-days for the evaluation, assuming experience has already been gained from evaluating tproxy.

- Simpler segment matching: For every incoming request, we either request it in its entirety or do not request it at all; the current partial-request code is overly complicated. Estimated effort: 1 person-day, although this may depend on (or overlap with) the event-driven connection manager.

- Improve compatibility: Identify the unsupported BitTorrent/Gnutella clients, locate the root causes (i.e., which message types cause the problem), and fix them. This will likely take some time; no estimate is available yet.

- Better logging system: We currently use a home-made logging system, but inconsistently: some modules log to stderr rather than through the logging system. If time permits, we may switch to an open-source logging library such as log4c. Estimated effort: 5-7 person-days, given the many logging statements in the system.
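The proposed simpler segment matching reduces to a single coverage test. A request is served from the cache only if the cached data covers it completely; otherwise the whole request is forwarded unchanged. The sketch below illustrates that idea only and is not existing pCache code. The `match_segment` name, the half-open byte ranges `[start, end)`, and the `<start> <end>` input format for cached ranges are assumptions.

```sh
#!/bin/sh
# Hypothetical all-or-nothing matching: print "cache" if the cached ranges
# (stdin, one half-open "<start> <end>" per line, any order, may overlap)
# fully cover the requested range [$1, $2); otherwise print "forward", meaning
# the whole request goes to the P2P network with no partial upstream request.
match_segment() {
  sort -n -k1,1 | awk -v lo="$1" -v hi="$2" '
    BEGIN { pos = lo + 0; hi += 0 }                 # pos = first byte not yet covered
    $1 + 0 <= pos && $2 + 0 > pos { pos = $2 + 0 }  # range extends the covered prefix
    END { if (pos >= hi) print "cache"; else print "forward" }'
}

printf '%s\n' "500 1000" "0 500" | match_segment 100 900    # prints: cache
printf '%s\n' "0 500" "600 1000" | match_segment 100 900    # prints: forward
```

Compared with splitting a request into cached and missing pieces, this likely gives up some byte hit rate on partially cached requests in exchange for much simpler request handling.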

Feedback and Comments

We welcome all comments and suggestions. You can enter your comments here.

Related Caching Systems and Commercial Products

- [OverCache P2P Caching and Delivery Platform] Oversi's MSP platform provides multi-service caching for P2P and other applications. For P2P caching, MSP takes a quite different approach from pCache: an MSP device actively participates in P2P networks, acting as an ultra-peer that serves only peers within the ISP where it is deployed. We believe this approach negatively impacts fairness in many P2P networks, such as BitTorrent, that employ algorithms to discourage free-riding. In fact, peers in ISPs that deploy Oversi's MSP would no longer upload at all, because they expect to get the data for free from the MSP platform. As the number of free-riders increases, the performance of the P2P network degrades, which in turn affects P2P users all over the world.

- [PeerApp UltraBand Family] Unlike OverCache, PeerApp's products support transparent caching of P2P traffic. The supported P2P protocols are BitTorrent, Gnutella, eDonkey, and FastTrack (the last two are no longer popular). However, like OverCache, PeerApp's products do not support cross-protocol caching: a file cached through a BitTorrent download will not be served to a Gnutella user requesting the same file (or vice versa). Our prototype software currently provides basic means for cross-protocol caching; full support for this feature was planned for the next version.

In addition, our prototype software is open source, whereas the products above are commercial and very expensive.

On-going Research Problems

We list the current research problems and draft solutions in a separate document (login required).

P2P Traffic Traces

- A brief description of our traces can be found in this readme.txt file. The trace files can be downloaded here.