Difference between revisions of "Private:Ahmed Reading Summaries"


Will summarize the readings here.. | Will summarize the readings here.. | ||

| + | |||

| + | == Android Development == | ||

| + | |||

| + | * [http://www.androidx86.org Androidx86] An x86 port of Android | ||

| + | * [http://code.google.com/p/live-android/ LiveAndroid] A LiveCD of Android | ||

| + | * [http://code.google.com/p/0xdroid/ 0xdroid] is a community-developed Android distribution by 0xlab (Note: it has a [http://gitorious.org/0xdroid/external_libsdl-12 port of SDL] to Android) | ||

| + | * [http://www.airplaysdk.com/index.php AirPlay SDK for iPhone and Android] | ||

| + | * [http://jiggawatt.org/badc0de/android/index.html Native application development for Android (primarily using C/C++)] | ||

| + | * [http://androidsocialmedia.com/developments/android-g1-root-access-why-how Android G1 root access: Why and How] | ||

| + | * [http://android-dls.com/wiki/index.php?title=Magic_Root_Access Magic Root Access] | ||

| + | * [http://www.droiddraw.org/ DroidDraw] User Interface (UI) designer/editor for programming the Android Cell Phone Platform | ||

| + | * [http://fyi.oreilly.com/2009/02/setting-up-your-android-develo.html Setting Up Your Android Development Environment] | ||

| + | * [http://marakana.com/forums/android/examples/ Marakana Android Examples] Very Useful | ||

| + | * [http://www.aton.com/porting-applications-using-the-android-ndk/ Porting Applications Using the Android NDK] | ||

| + | * [http://www.linux-mag.com/cache/7697/1.html Writing “C” Code for Android] | ||

| + | * [http://developer.android.com/guide/developing/tools/adb.html Android Debug Bridge] | ||

| + | * [http://www.vogella.de/articles/Android/article.html Android Development Tutorial] | ||

| + | * [http://www.ibm.com/developerworks/opensource/library/os-android-devel/index.html Introduction to Android development] | ||

| + | * [http://www.droidnova.com/android-3d-game-tutorial-part-i,312.html Android 3D Game Tutorial] | ||

| + | * [http://www.ibm.com/developerworks/opensource/library/os-tinycloud/index.html?ca=drs- A tiny cloud in Android] Exploring the Android file system from your browser | ||

| + | |||

| + | * [http://www.pocketmagic.net/?p=682 Android C native development – take full control!] | ||

| + | * [http://benno.id.au/blog/2007/11/13/android-native-apps Native C applications for Android] | ||

| + | * [http://gitorious.org/~olvaffe/ffmpeg/ffmpeg-android ffmpeg-android] | ||

| + | * [http://groups.google.com/group/android-ndk/browse_thread/thread/f25d5c7f519bf0c5/1a57a7d4a53c852a?lnk=raot Thread on compiling ffmpeg on Android] | ||

| + | * [http://oo-androidnews.blogspot.com/2010/02/ffmpeg-and-androidmk.html ffmpeg and Android.mk] | ||

| + | * [http://groups.google.com/group/android-ndk/browse_thread/thread/28520bbd102d0b33?pli=1 Thread about an STL port to Android] | ||

| + | * [http://zakattacktaylor.com/?p=17 The Android Activity and GLSurfaceView from native code] | ||

| + | * [http://nhenze.net/?p=253 Camera image->NDK->OpenGL texture] | ||

| + | * [http://blog.vlad1.com/2010/03/19/things-i-learned-today-android-opengl-edition-2/ Android OpenGL Edition] | ||

| + | |||

| + | '''Note:''' Installing the SDK on a 64-bit Linux distribution may result in misleading errors when running the emulator or the tools that come with the SDK (e.g. an error indicating that a directory or file is not found although you are sure it exists). To solve this, some 32-bit libraries/packages that are not installed by default in a 64-bit distribution may need to be installed. This can be done easily with the getlibs utility as indicated [http://blog.jayway.com/2009/10/21/getting-android-sdk-working-on-ubuntu-64/ here]. | ||

| + | |||

| + | == MPlayer on Android == | ||

| + | |||

| + | Running mplayer on android emulator: | ||

| + | |||

| + | <pre> | ||

| + | 1. compile mplayer-1.0rc2 | ||

| + | |||

| + | modify vo_fbdev.c:661 /dev/fb0 -> /dev/graphics/fb0 | ||

| + | |||

| + | ./configure --enable-fbdev --host-cc=gcc --target=arm --cc=arm-none-linux-gnueabi-gcc --as=arm-none-linux-gnueabi-as --ar=arm-none-linux-gnueabi-ar --ranlib=arm-none-linux-gnueabi-ranlib --enable-static | ||

| + | |||

| + | 2. upload mplayer with ddms | ||

| + | |||

| + | 3. run mplayer with option -vo fbdev | ||

| + | |||

| + | You'll need a cross-compiler, which is pretty easy to set up on Debian: | ||

| + | http://android-dls.com/wiki/index.php?title=Compiling_for_Android | ||

| + | After doing that, run apt-cross -i zlib1g-dev. | ||

| + | |||

| + | You'll need to change arm-none-linux to arm-linux, and add LDFLAGS=-lz to the configure arguments | ||

| + | </pre> | ||

| + | |||

| + | Other URLs to check: | ||

| + | |||

| + | http://www.talkandroid.com/android-forums/android-applications/3508-android-webkit-plugin-audio-problem.html | ||

| + | |||

| + | http://www.anddev.org/sdl_port_for_android_sdk-ndk_16-t9218.html | ||

| + | |||

| + | http://androidforums.com/android-media/1820-best-media-player-so-far.html | ||

| + | |||

| + | http://www.techjini.com/blog/2009/10/26/android-ndk-an-introduction-how-to-work-with-ndk/ | ||

| + | |||

| + | http://marakana.com/forums/android/android_examples/49.html | ||

| + | |||

| + | http://earlence.blogspot.com/2009/07/writing-applications-using-android-ndk.html | ||

| + | |||

| + | http://developer.android.com/guide/appendix/media-formats.html | ||

| + | |||

| + | http://groups.google.com/group/android-developers/browse_thread/thread/c3eafcddcf5e0511?pli=1 | ||

| + | |||

| + | http://osdir.com/ml/android-porting/2009-09/msg00109.html | ||

| + | |||

| + | |||

| + | Things to look into: | ||

| + | |||

| + | [http://www.packetvideo.com/products/android/index.html PacketVideo's OpenCore Media Framework]. Source tree for OpenCore for Android can be found [http://android.git.kernel.org/?p=platform/external/opencore.git;a=tree here]. | ||

| + | |||

| + | [http://www.freedesktop.org/wiki/GstOpenMAX GstOpenMAX] a GStreamer plug-in that allows communication with [http://www.khronos.org/openmax/ OpenMAX] IL (an industry standard that provides an abstraction layer for computer graphics, video, and sound routines) components. | ||

| + | |||

| + | |||

| + | == Graph Libraries and Tools == | ||

| + | |||

| + | * [http://www.informit.com/articles/article.aspx?p=25777 Article from InformIT on the Boost Graph Library] | ||

| + | * [http://www.drdobbs.com/184402049;jsessionid=CKLCKU3HTU4TVQE1GHOSKH4ATMY32JVN?pgno=1 Dr. Dobb's article on GraphViz and C++] | ||

| + | * [http://www.yworks.com/en/products_yed_applicationfeatures.html yEd Graph Editor] | ||

| + | * [http://code.google.com/p/jrfonseca/wiki/XDot XDot] interactive viewer for Graphviz dot files | ||

| + | * [http://linuxdevcenter.com/pub/a/linux/2004/05/06/graphviz_dot.html?page=1 An Introduction to GraphViz and dot] | ||

| + | * [http://blogs.sun.com/sundararajan/entry/graphs_gxl_dot_and_graphviz Graphs, GXL, dot and Graphviz] | ||

| + | * [http://www.linuxjournal.com/article/7275?page=0,2 An Introduction to GraphViz] | ||

| + | * [http://www.karakas-online.de/forum/viewtopic.php?t=2647 How to use Graphviz to generate complex graphs] | ||

| + | * [http://www.antlr.org/wiki/display/CS652/Parsing+Graphviz+DOT+files+the+hard+way Parsing Graphviz DOT files the hard way] | ||

== '''Peer-to-Peer and SVC''' == | == '''Peer-to-Peer and SVC''' == | ||


* Overview of the Scalable Video Coding Extension of the H.264/AVC Standard | * Overview of the Scalable Video Coding Extension of the H.264/AVC Standard | ||

* Enabling Adaptive Video Streaming in P2P Systems | * Enabling Adaptive Video Streaming in P2P Systems | ||

| + | |||

| + | ==== References ==== | ||

| + | |||

| + | * J. Chakareski, S. Han, and B. Girod, [http://portal.acm.org/citation.cfm?id=957013.957100 Layered Coding vs. Multiple Descriptions for Video Streaming over Multiple Paths], In Proceedings of the eleventh ACM international conference on Multimedia, 2003. | ||

| + | * J. Monteiro, C. Calafate, and M. Nunes, [http://ieeexplore.ieee.org/xpls/abs_all.jsp?isnumber=4604792&arnumber=4595644&count=23&index=15 Evaluation of the H.264 Scalable Video Coding in Error Prone IP Networks], IEEE Transactions on Broadcasting, 2008. | ||

| + | * H. Schwarz, D. Marpe, and T. Wiegand, [http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?&arnumber=4317636 Overview of the Scalable Video Coding Extension of the H.264/AVC Standard], IEEE Transactions on Circuits and Systems for Video Technology, 2007. | ||

| + | * D. Jurca, J. Chakareski, J.-P. Wagner, and P. Frossard, [http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4251079 Enabling adaptive video streaming in P2P systems], IEEE Communications Magazine, 2007. | ||

| + | |||

| + | * Z. Liu, Y. Shen, K.W. Ross, S.S. Panwar, Y. Wang, [http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=5208239 LayerP2P: Using Layered Video Chunks in P2P Live Streaming], IEEE Transactions on Multimedia, 2009. | ||

| + | |||

| + | * S. Wenger, Ye-Kui Wang, and T. Schierl, [http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4317640 Transport and Signaling of SVC in IP Networks], IEEE Transactions on Circuits and Systems for Video Technology, 2007. | ||

| + | |||

| + | * Ye-Kui Wang, M.M. Hannuksela, S. Pateux, A. Eleftheriadis, and S. Wenger, [http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4106492 System and Transport Interface of H.264/AVC Scalable Extension], IEEE Transactions on Circuits and Systems for Video Technology, 2007. | ||

| + | |||

| + | * S. Wenger, Ye-kui Wang, and M.M. Hannuksela, [http://www.springerlink.com/content/w60g175201226482/ RTP payload format for H.264/SVC scalable video coding], Journal of Zhejiang University - Science A, Vol. 7, No. 5, 2006. | ||

| + | |||

| + | * R. Kuschniga, I. Koflera, M. Ransburga, and H. Hellwagner, [http://dx.doi.org/10.1016/j.jvcir.2008.07.004 Design options and comparison of in-network H.264/SVC adaptation], Journal of Visual Communication and Image Representation, Vol. 19, No. 8, 2008. | ||

| + | |||

| + | * S. Wenger, [http://ieeexplore.ieee.org/search/srchabstract.jsp?arnumber=1218197&isnumber=27384&punumber=76&k2dockey=1218197@ieeejrns&query=%28%28h.264%2Favc+over+ip%29%3Cin%3Emetadata%29&pos=0&access=no H.264/AVC over IP], IEEE Transactions on Circuits and Systems for Video Technology, 2003. | ||

| + | |||

| + | * S. Wenger, M.M. Hannuksela, T. Stockhammer, M. Westerlund, and D. Singer, [http://www.ietf.org/rfc/rfc3984.txt RTP Payload Format for H.264 Video], RFC 3984. | ||

| + | |||

| + | * S. Wenger, Y.-K. Wang (Huawei), T. Schierl (Fraunhofer HHI), and A. Eleftheriadis (Vidyo), [http://tools.ietf.org/id/draft-ietf-avt-rtp-svc-20.txt RTP Payload Format for SVC], Internet-Draft. | ||

| + | |||

| + | * [http://news.bbc.co.uk/2/hi/technology/10393668.stm England match triggers net surge] | ||

| + | |||

| + | |||

| + | ==== Tips ==== | ||

| + | |||

| + | * [http://mailman.videolan.org/pipermail/vlc/2007-March/014366.html Can VLC recognize RTP video stream?] | ||

| + | |||

== '''Long Term Evolution (LTE)''' == | == '''Long Term Evolution (LTE)''' == | ||


==== Long Term Evolution of 3GPP ==== | ==== Long Term Evolution of 3GPP ==== | ||

| + | |||

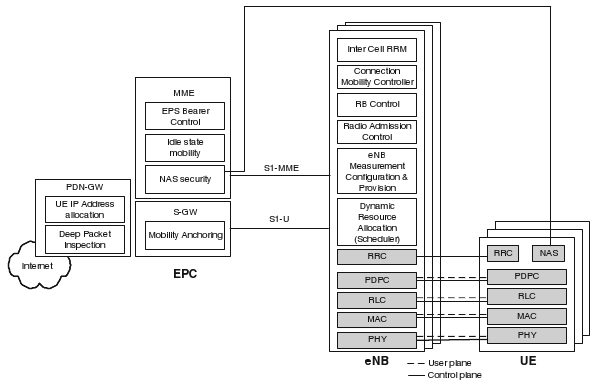

| + | * LTE encompasses the evolution of the radio access through the Evolved-UTRAN (E-UTRAN) | ||

| + | * LTE is accompanied by an evolution of the non-radio aspects under the term System Architecture Evolution (SAE) | ||

| + | * The entire system composed of both LTE and SAE is called the Evolved Packet System (EPS) | ||

| + | * A ''bearer'' is an IP packet flow with a defined QoS between the gateway and the User Equipment (UE) | ||

| + | * At a high level, the network comprises the Core Network (CN), called the Evolved Packet Core (EPC) in SAE, and the access network (E-UTRAN) | ||

| + | * CN is responsible for overall control of UE and establishment of the bearers | ||

| + | * Main logical nodes in EPC are: | ||

| + | ** PDN Gateway (P-GW) | ||

| + | ** Serving Gateway (S-GW) | ||

| + | ** Mobility Management Entity (MME) | ||

| + | * EPC also includes other nodes and functions, such as the Home Subscriber Server (HSS) and the Policy Control and Charging Rules Function (PCRF) | ||

| + | * EPS only provides a bearer path of a certain QoS; control of multimedia applications is provided by the IP Multimedia Subsystem (IMS), which is considered outside of the EPS | ||

| + | * E-UTRAN solely contains the evolved base stations, called ''eNodeB'' or ''eNB'' | ||

| + | |||

| + | [[Image:LTE_Feature_Distribution.png|LTE Feature Distribution|frame|center|500px]] | ||

===== LTE PHY Layer ===== | ===== LTE PHY Layer ===== | ||


==== LTE Radio Interface Architecture ==== | ==== LTE Radio Interface Architecture ==== | ||

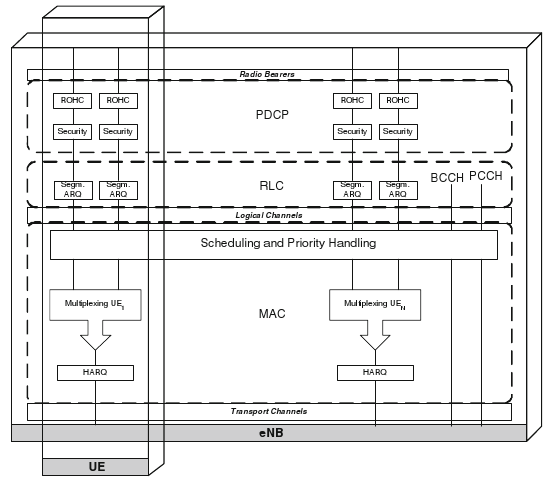

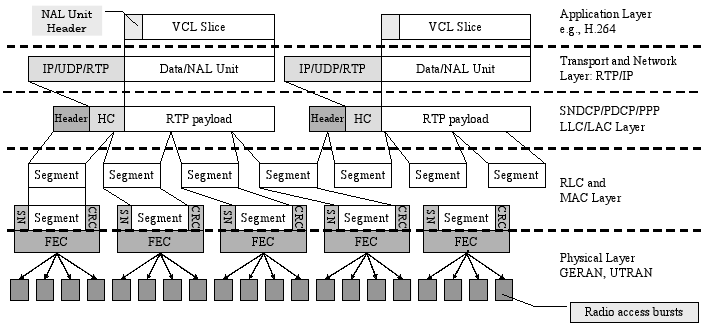

| + | * There are control plane and data plane protocol layers in eNB and UE | ||

* Data to be transmitted in the downlink enters the processing chain in the form of IP packets on one of the ''SAE bearers'' | * Data to be transmitted in the downlink enters the processing chain in the form of IP packets on one of the ''SAE bearers'' | ||

* IP packets are passed through multiple protocol entities: | * IP packets are passed through multiple protocol entities: | ||


** ''Physical Layer (PHY)'' handles coding/decoding, modulation/demodulation, multi-antenna mapping, and other typical physical layer functions | ** ''Physical Layer (PHY)'' handles coding/decoding, modulation/demodulation, multi-antenna mapping, and other typical physical layer functions | ||

*** offers services to the MAC layer in the form of ''transport channels'' | *** offers services to the MAC layer in the form of ''transport channels'' | ||

| + | |||

| + | [[Image:LTE_LayersChannels.png|Layer and Channel Structure for UE and eNB|frame|center]] | ||

===== RLC ===== | ===== RLC ===== | ||


* The resources, as well as the transport-block size and the modulation scheme, are under control of the scheduler (of the MAC layer) | * The resources, as well as the transport-block size and the modulation scheme, are under control of the scheduler (of the MAC layer) | ||

* For the broadcast of system information on the BCH, a mobile terminal must be able to receive this information channel as one of the first steps prior to accessing the system. Consequently, the transmission format must be known to the terminals a priori and there is no dynamic control of any of the transmission parameters from the MAC layer in this case | * For the broadcast of system information on the BCH, a mobile terminal must be able to receive this information channel as one of the first steps prior to accessing the system. Consequently, the transmission format must be known to the terminals a priori and there is no dynamic control of any of the transmission parameters from the MAC layer in this case | ||

| + | |||

| + | [[Image:LTE_PHY_Interaction1.png|LTE Physical Layer Interaction|frame|center]] | ||


===== Video Streaming over MBMS: A System Design Approach ===== | ===== Video Streaming over MBMS: A System Design Approach ===== | ||

| + | |||

| + | * Introduces and analyzes the main system design parameters that influence the performance of H.264 streaming over EGPRS and UMTS bearers | ||

| + | * Investigates the application of an advanced receiver concept (permeable layer receiver) in MBMS video broadcasting environments | ||

| + | * Presents RealNeS-MBMS, a real-time testbed for MBMS | ||

| + | |||

===== Scalable and Media Aware Adaptive Video Streaming over Wireless Networks ===== | ===== Scalable and Media Aware Adaptive Video Streaming over Wireless Networks ===== | ||


** do not maintain fairness if a deadline is expected to be violated: packets with lower priorities are first delayed and later dropped if necessary | ** do not maintain fairness if a deadline is expected to be violated: packets with lower priorities are first delayed and later dropped if necessary | ||

* In addition, SVC coding is tuned, leading to a generalized scalability scheme including regions of interest (ROI) (combining ROI coding with SNR and temporal scalability provides a wide range of possible bitstream partitions) | * In addition, SVC coding is tuned, leading to a generalized scalability scheme including regions of interest (ROI) (combining ROI coding with SNR and temporal scalability provides a wide range of possible bitstream partitions) | ||

| + | |||

==== References ==== | ==== References ==== | ||


* X. Ji, J. Huang, M. Chiang, and F. Catthoor, [http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4533512 Downlink OFDM scheduling and resource allocation for delay constrained SVC streaming] In Proceedings of the IEEE International Conference on Communications (ICC), 2008. | * X. Ji, J. Huang, M. Chiang, and F. Catthoor, [http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4533512 Downlink OFDM scheduling and resource allocation for delay constrained SVC streaming] In Proceedings of the IEEE International Conference on Communications (ICC), 2008. | ||

* J. Afzal, T. Stockhammer, T. Gasiba, and W. Xu, [http://www.academypublisher.com/jmm/vol01/no05/jmm01052535.pdf Video Streaming over MBMS: A System Design Approach], Journal of Multimedia, vol. 1, no. 5, pp. 25-35, 2006. | * J. Afzal, T. Stockhammer, T. Gasiba, and W. Xu, [http://www.academypublisher.com/jmm/vol01/no05/jmm01052535.pdf Video Streaming over MBMS: A System Design Approach], Journal of Multimedia, vol. 1, no. 5, pp. 25-35, 2006. | ||

| − | + | * [http://news.bbc.co.uk/2/hi/programmes/click_online/9118220.stm Has South Korea lost its lead with mobile tech?] From BBC Click | |

==== Tools ==== | ==== Tools ==== | ||

Latest revision as of 00:09, 24 October 2010

Will summarize the readings here..

Android Development

- Androidx86 An x86 port of Android

- LiveAndroid A LiveCD of Android

- 0xdroid is a community-developed Android distribution by 0xlab (Note: it has a port of SDL to Android)

- AirPlay SDK for iPhone and Android

- Native application development for Android (primarily using C/C++)

- Android G1 root access: Why and How

- Magic Root Access

- DroidDraw User Interface (UI) designer/editor for programming the Android Cell Phone Platform

- Setting Up Your Android Development Environment

- Marakana Android Examples Very Useful

- Porting Applications Using the Android NDK

- Writing “C” Code for Android

- Android Debug Bridge

- Android Development Tutorial

- Introduction to Android development

- Android 3D Game Tutorial

- A tiny cloud in Android Exploring the Android file system from your browser

- Android C native development – take full control!

- Native C applications for Android

- ffmpeg-android

- Thread on compiling ffmpeg on Android

- ffmpeg and Android.mk

- Thread about an STL port to Android

- The Android Activity and GLSurfaceView from native code

- Camera image->NDK->OpenGL texture

- Android OpenGL Edition

Note: Installing the SDK on a 64-bit Linux distribution may result in misleading errors when running the emulator or the tools that come with the SDK (e.g. an error indicating that a directory or file is not found although you are sure it exists). To solve this, some 32-bit libraries/packages that are not installed by default in a 64-bit distribution may need to be installed. This can be done easily with the getlibs utility as indicated here.

MPlayer on Android

Running mplayer on android emulator:

1. compile mplayer-1.0rc2

modify vo_fbdev.c:661 /dev/fb0 -> /dev/graphics/fb0

./configure --enable-fbdev --host-cc=gcc --target=arm --cc=arm-none-linux-gnueabi-gcc --as=arm-none-linux-gnueabi-as --ar=arm-none-linux-gnueabi-ar --ranlib=arm-none-linux-gnueabi-ranlib --enable-static

2. upload mplayer with ddms

3. run mplayer with option -vo fbdev

You'll need a cross-compiler, which is pretty easy to set up on Debian:
http://android-dls.com/wiki/index.php?title=Compiling_for_Android
After doing that, run apt-cross -i zlib1g-dev.

You'll need to change arm-none-linux to arm-linux, and add LDFLAGS=-lz to the configure arguments

Other URLs to check:

http://www.anddev.org/sdl_port_for_android_sdk-ndk_16-t9218.html

http://androidforums.com/android-media/1820-best-media-player-so-far.html

http://www.techjini.com/blog/2009/10/26/android-ndk-an-introduction-how-to-work-with-ndk/

http://marakana.com/forums/android/android_examples/49.html

http://earlence.blogspot.com/2009/07/writing-applications-using-android-ndk.html

http://developer.android.com/guide/appendix/media-formats.html

http://groups.google.com/group/android-developers/browse_thread/thread/c3eafcddcf5e0511?pli=1

http://osdir.com/ml/android-porting/2009-09/msg00109.html

Things to look into:

PacketVideo's OpenCore Media Framework. Source tree for OpenCore for Android can be found here.

GstOpenMAX a GStreamer plug-in that allows communication with OpenMAX IL (an industry standard that provides an abstraction layer for computer graphics, video, and sound routines) components.

Graph Libraries and Tools

- Article from InformIT on the Boost Graph Library

- Dr. Dobb's article on GraphViz and C++

- yEd Graph Editor

- XDot interactive viewer for Graphviz dot files

- An Introduction to GraphViz and dot

- Graphs, GXL, dot and Graphviz

- An Introduction to GraphViz

- How to use Graphviz to generate complex graphs

- Parsing Graphviz DOT files the hard way

Peer-to-Peer and SVC

- Peer-Driven Video Streaming: Multiple Descriptions versus Layering

- Layered Coding vs. Multiple Descriptions for Video Streaming over Multiple Paths

- Evaluation of the H.264 Scalable Video Coding in Error Prone IP Networks

- Overview of the Scalable Video Coding Extension of the H.264/AVC Standard

- Enabling Adaptive Video Streaming in P2P Systems

References

- J. Chakareski, S. Han, and B. Girod, Layered Coding vs. Multiple Descriptions for Video Streaming over Multiple Paths, In Proceedings of the eleventh ACM international conference on Multimedia, 2003.

- J. Monteiro, C. Calafate, and M. Nunes, Evaluation of the H.264 Scalable Video Coding in Error Prone IP Networks, IEEE Transactions on Broadcasting, 2008.

- H. Schwarz, D. Marpe, and T. Wiegand, Overview of the Scalable Video Coding Extension of the H.264/AVC Standard, IEEE Transactions on Circuits and Systems for Video Technology, 2007.

- D. Jurca, J. Chakareski, J.-P. Wagner, and P. Frossard, Enabling adaptive video streaming in P2P systems, IEEE Communications Magazine, 2007.

- Z. Liu, Y. Shen, K.W. Ross, S.S. Panwar, Y. Wang, LayerP2P: Using Layered Video Chunks in P2P Live Streaming, IEEE Transactions on Multimedia, 2009.

- S. Wenger, Ye-Kui Wang, and T. Schierl, Transport and Signaling of SVC in IP Networks, IEEE Transactions on Circuits and Systems for Video Technology, 2007.

- Ye-Kui Wang, M.M. Hannuksela, S. Pateux, A. Eleftheriadis, and S. Wenger, System and Transport Interface of H.264/AVC Scalable Extension, IEEE Transactions on Circuits and Systems for Video Technology, 2007.

- S. Wenger, Ye-kui Wang, and M.M. Hannuksela, RTP payload format for H.264/SVC scalable video coding, Journal of Zhejiang University - Science A, Vol. 7, No. 5, 2006.

- R. Kuschniga, I. Koflera, M. Ransburga, and H. Hellwagner, Design options and comparison of in-network H.264/SVC adaptation, Journal of Visual Communication and Image Representation, Vol. 19, No. 8, 2008.

- S. Wenger, H.264/AVC over IP, IEEE Transactions on Circuits and Systems for Video Technology, 2003.

- S. Wenger, M.M. Hannuksela, T. Stockhammer, M. Westerlund, and D. Singer, RTP Payload Format for H.264 Video, RFC 3984.

- S. Wenger, Y.-K. Wang (Huawei), T. Schierl (Fraunhofer HHI), and A. Eleftheriadis (Vidyo), RTP Payload Format for SVC, Internet-Draft.

Tips

Long Term Evolution (LTE)

- Mobile Video Transmission Using Scalable Video Coding

- LTE - An Introduction

- Optimal Transmission Scheduling for Scalable Wireless Video Broadcast with Rateless Erasure Correction Code

- Dynamic Session Control for Scalable Video Coding over IMS

- Scheduling and Resource Allocation for SVC Streaming over OFDM Downlink Systems

- Mobile Broadband: Including WiMAX and LTE

- Chapter-11: Long Term Evolution of 3GPP

- 3G Evolution HSPA and LTE for Mobile Broadband

- Chapter-11: MBMS: Multimedia Broadcast Multicast Service

- The UMTS Long Term Evolution: From Theory to Practice

- Chapter-2: Network Architecture

- Chapter-14: Broadcast Operation

Acronyms

- 3GPP 3rd Generation Partnership Project

- BM-SC Broadcast Multicast Service Centre

- CN Core Network

- CP Cyclic Prefix

- EPC Evolved Packet Core

- EPS Evolved Packet System

- ICI InterCell Interference

- LTE Long Term Evolution

- MANE Media-Aware Network Element

- MBMS Multimedia Broadcast Multicast Service

- MIMO Multiple Input Multiple Output

- OFDM Orthogonal Frequency Division Multiplexing

- OFDMA Orthogonal Frequency Division Multiple Access

- SAE System Architecture Evolution

- SC-FDMA Single Carrier Frequency Division Multiple Access

- TTI Transmission Time Interval

- UE User Equipment

- UTRAN UMTS Terrestrial Radio Access Network

Long Term Evolution of 3GPP

- LTE encompasses the evolution of the radio access through the Evolved-UTRAN (E-UTRAN)

- LTE is accompanied by an evolution of the non-radio aspects under the term System Architecture Evolution (SAE)

- The entire system composed of both LTE and SAE is called the Evolved Packet System (EPS)

- A bearer is an IP packet flow with a defined QoS between the gateway and the User Equipment (UE)

- At a high level, the network comprises the Core Network (CN), called the Evolved Packet Core (EPC) in SAE, and the access network (E-UTRAN)

- CN is responsible for overall control of UE and establishment of the bearers

- Main logical nodes in EPC are:

- PDN Gateway (P-GW)

- Serving Gateway (S-GW)

- Mobility Management Entity (MME)

- EPC also includes other nodes and functions, such as the Home Subscriber Server (HSS) and the Policy Control and Charging Rules Function (PCRF)

- EPS only provides a bearer path of a certain QoS; control of multimedia applications is provided by the IP Multimedia Subsystem (IMS), which is considered outside of the EPS

- E-UTRAN solely contains the evolved base stations, called eNodeB or eNB

LTE PHY Layer

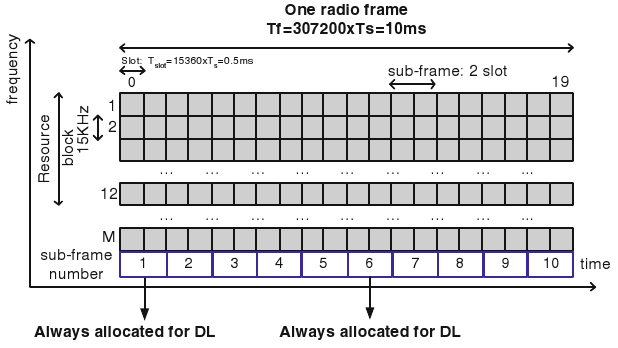

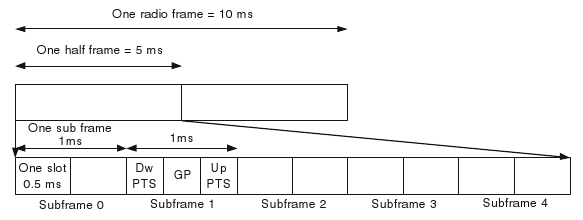

- Based on OFDMA with cyclic prefix in downlink, and on SC-FDMA with a cyclic prefix in the uplink

- Three duplexing modes are supported: full duplex FDD, half duplex FDD, and TDD

- Two frame structure types:

- Type-1 shared by both full- and half-duplex FDD

- Type-2 applicable to TDD

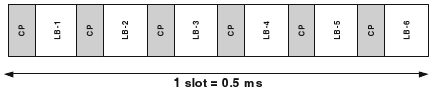

- Type-1 radio frame has a length of 10 ms and contains 20 slots (slot duration is 0.5 ms)

- Two adjacent slots constitute a subframe of length 1 ms

- Supported modulation schemes are: QPSK, 16QAM, 64QAM

- Broadcast channel only uses QPSK

- Maximum information block size = 6144 bits

- CRC-24 used for error detection
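The Type-1 frame timing above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the numbers given in these notes (10 ms frame, 20 slots, two slots per subframe); the helper name `slot_to_subframe` is made up for illustration.

```python
from fractions import Fraction

FRAME_MS = Fraction(10)      # Type-1 radio frame length, from the notes
SLOTS_PER_FRAME = 20         # from the notes
SLOTS_PER_SUBFRAME = 2       # two adjacent slots form one subframe

# Derived durations, kept as exact fractions to avoid rounding surprises.
slot_ms = FRAME_MS / SLOTS_PER_FRAME
subframe_ms = slot_ms * SLOTS_PER_SUBFRAME
subframes_per_frame = SLOTS_PER_FRAME // SLOTS_PER_SUBFRAME


def slot_to_subframe(slot_index):
    """Map a slot index (0..19) to (subframe index, slot position in subframe)."""
    if not 0 <= slot_index < SLOTS_PER_FRAME:
        raise ValueError("slot index out of range")
    return divmod(slot_index, SLOTS_PER_SUBFRAME)


print(float(slot_ms), float(subframe_ms), subframes_per_frame)  # 0.5 1.0 10
```

Since one subframe lasts 1 ms, a frame holds 10 subframes, which matches the 20 slots of 0.5 ms each.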

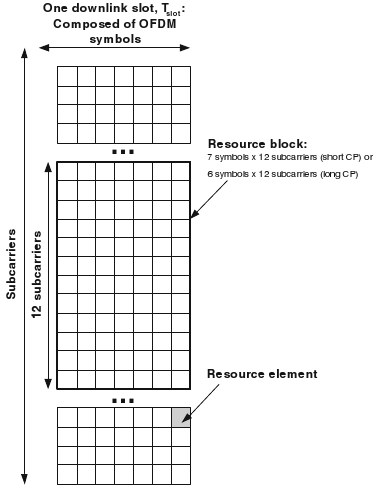

OFDMA Downlink

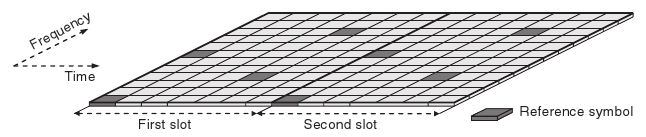

- Scheduler in eNB (base station) allocates resource blocks (which are the smallest elements of resource allocation) to users for a predetermined amount of time

- Slots consist of either 6 (for long cyclic prefix) or 7 (for short cyclic prefix) OFDM symbols

- Longer cyclic prefixes are desired in environments with longer multipath delay spread

- Number of available subcarriers changes depending on transmission bandwidth (but subcarrier spacing is fixed)

- To enable channel estimation in OFDM transmission, known reference symbols are inserted into the OFDM time-frequency grid. In LTE, these reference symbols are jointly referred to as downlink reference signals.

- Three types of reference signals are defined for the LTE downlink:

- Cell-specific downlink reference signals

- transmitted in every downlink subframe, and span the entire downlink cell bandwidth.

- UE-specific reference signal

- only transmitted within the resource blocks assigned for DL-SCH transmission to that specific terminal

- MBSFN reference signals

- Cell-specific downlink reference signals
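To make the relation between bandwidth, subcarriers, and resource blocks concrete, here is a small sketch. The symbols-per-slot values come from these notes; the 12 subcarriers per resource block, the 15 kHz spacing, and the resource-block counts per channel bandwidth are not stated here and are filled in as assumptions from the commonly quoted LTE numerology, so they should be checked against the specification before being relied on.

```python
# Assumptions (not from these notes): standard LTE numerology.
SUBCARRIERS_PER_RB = 12
SUBCARRIER_SPACING_KHZ = 15
RBS_PER_BANDWIDTH_MHZ = {1.4: 6, 3: 15, 5: 25, 10: 50, 15: 75, 20: 100}

# From the notes: OFDM symbols per slot for each cyclic prefix length.
SYMBOLS_PER_SLOT = {"short": 7, "long": 6}


def resource_elements_per_rb(cyclic_prefix):
    """Resource elements (subcarrier x symbol) in one resource block per slot."""
    return SUBCARRIERS_PER_RB * SYMBOLS_PER_SLOT[cyclic_prefix]


def occupied_subcarriers(bandwidth_mhz):
    """Number of subcarriers in use; the spacing stays fixed, the count scales."""
    return SUBCARRIERS_PER_RB * RBS_PER_BANDWIDTH_MHZ[bandwidth_mhz]


for bw in (5, 20):
    n = occupied_subcarriers(bw)
    print(bw, "MHz:", n, "subcarriers,", n * SUBCARRIER_SPACING_KHZ / 1000, "MHz occupied")
```

The occupied bandwidth comes out below the nominal channel bandwidth because part of the channel is left as guard band.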

LTE MAC Layer

- eNB scheduler controls the time/frequency resources for a given time for uplink and downlink

- Scheduler dynamically allocates resources to UEs at each Transmission Time Interval (TTI)

- Depending on channel conditions, scheduler selects best multiplexing for UE

- Downlink LTE considers the following schemes as scheduler algorithms:

- Frequency Selective Scheduling (FSS)

- Frequency Diverse Scheduling (FDS)

- Proportional Fair Scheduling (PFS)

- Link adaptation is performed through adaptive modulation and coding
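As an illustration of Proportional Fair Scheduling, the sketch below picks, in each TTI, the UE with the largest ratio of currently achievable rate to smoothed past throughput. This is the textbook form of the metric, not something these notes or 3GPP prescribe; the function name `pf_schedule`, the smoothing factor `alpha`, and the single-user-per-TTI simplification are all assumptions made for the example.

```python
def pf_schedule(inst_rates, avg_rates, alpha=0.1):
    """Choose one UE for this TTI and update the throughput averages in place.

    inst_rates: dict UE -> rate achievable in this TTI (from channel reports)
    avg_rates:  dict UE -> exponentially smoothed past throughput
    alpha:      smoothing factor of the moving average (hypothetical value)
    """
    eps = 1e-9  # avoids division by zero for UEs that were never served
    chosen = max(inst_rates, key=lambda ue: inst_rates[ue] / (avg_rates[ue] + eps))
    for ue in avg_rates:
        served = inst_rates[ue] if ue == chosen else 0.0
        avg_rates[ue] = (1 - alpha) * avg_rates[ue] + alpha * served
    return chosen


inst = {"ue1": 10.0, "ue2": 4.0}
avg = {"ue1": 10.0, "ue2": 2.0}
print(pf_schedule(inst, avg), avg)
```

Here `ue2` wins even though its instantaneous rate is lower, because its rate is high relative to what it has received so far. That trade-off between throughput and fairness is the point of the scheme.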

LTE Radio Interface Architecture

- There are control plane and data plane protocol layers in eNB and UE

- Data to be transmitted in the downlink enters the processing chain in the form of IP packets on one of the SAE bearers

- IP packets are passed through multiple protocol entities:

- Packet Data Convergence Protocol (PDCP) performs IP header compression, to reduce the number of bits to transmit over the radio interface, based on Robust Header Compression (ROHC) in addition to ciphering and integrity protection of the transmitted data

- Radio Link Control (RLC) is responsible for segmentation/concatenation, retransmission handling, and in-sequence delivery to higher layers

- offers services to the PDCP in the form of radio bearers

- Medium Access Control (MAC) handles hybrid-ARQ retransmissions and uplink and downlink scheduling at the eNodeB

- offers services to the RLC in the form of logical channels

- Physical Layer (PHY) handles coding/decoding, modulation/demodulation, multi-antenna mapping, and other typical physical layer functions

- offers services to the MAC layer in the form of transport channels

RLC

- Depending on the scheduler decision, a certain amount of data is selected for transmission from the RLC SDU buffer and the SDUs are segmented/concatenated to create the RLC PDU. Thus, for LTE the RLC PDU size varies dynamically

- In each RLC PDU, a header is included, containing, among other things, a sequence number used for in-sequence delivery and by the retransmission mechanism

- A retransmission protocol operates between the RLC entities in the receiver and transmitter. By monitoring the sequence numbers of the incoming PDUs, the receiving RLC can identify missing PDUs

- Although the RLC is capable of handling transmission errors due to noise, unpredictable channel variations, etc., error-free delivery is in most cases handled by the MAC-based hybrid-ARQ protocol
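The RLC behaviour described above can be sketched in a few lines: SDUs from the buffer are concatenated and segmented into PDUs whose sizes follow the scheduler's decisions, each PDU carries a sequence number, and the receiver spots gaps in the numbers it has seen. This is a simplified model under stated assumptions. Real RLC headers also carry the information needed to recover SDU boundaries, and sequence numbers wrap around; both are ignored here. The names `build_pdus` and `missing_sequence_numbers` are hypothetical.

```python
def build_pdus(sdus, grant_sizes, first_sn=0):
    """Concatenate/segment buffered SDUs into PDUs sized by the scheduler.

    sdus:        list of bytes objects waiting in the SDU buffer
    grant_sizes: payload size (bytes) the scheduler allows for each PDU
    Returns a list of (sequence_number, payload) tuples.
    """
    stream = b"".join(sdus)
    pdus, offset, sn = [], 0, first_sn
    for size in grant_sizes:
        if offset >= len(stream):
            break  # buffer drained, nothing left to send
        pdus.append((sn, stream[offset:offset + size]))
        offset += size
        sn += 1
    return pdus


def missing_sequence_numbers(received_sns):
    """Return the gaps between the lowest and highest sequence number seen."""
    if not received_sns:
        return []
    seen = set(received_sns)
    return [sn for sn in range(min(seen), max(seen) + 1) if sn not in seen]


print(build_pdus([b"abcd", b"efg"], [3, 3, 3]))
print(missing_sequence_numbers([0, 1, 4, 5]))
```

Because the grant sizes change from one scheduling decision to the next, the PDU size varies dynamically, as the first bullet notes.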

MAC

- A logical channel is defined by the type of information it carries and is generally classified as:

- a control channel, used for transmission of control and configuration information necessary for operating an LTE system

- a traffic channel, used for the user data

- A transport channel is defined by how and with what characteristics the information is transmitted over the radio interface

- Data on a transport channel is organized into transport blocks. In each Transmission Time Interval (TTI), at most one transport block of a certain size is transmitted over the radio interface to/from a mobile terminal in absence of spatial multiplexing

- Associated with each transport block is a Transport Format (TF), specifying how the transport block is to be transmitted over the radio interface (it includes information such as transport-block size, the modulation scheme, and the antenna mapping)

- By varying the transport format, the MAC layer can realize different data rates. Rate control is therefore also known as transport-format selection

- LTE radio access is based on shared-channel transmission; that is, time–frequency resources are dynamically shared between users. The scheduler is part of the MAC layer and controls the assignment of uplink and downlink resources

- The downlink scheduler (better viewed as a separate entity although part of the MAC layer) dynamically decides which terminal(s) to transmit to and, for each of these terminals, the set of resource blocks upon which the terminal’s DL-SCH should be transmitted

- The scheduling strategy is implementation specific and not specified by 3GPP

- The overall goal of most schedulers is to take advantage of the channel variations between mobile terminals and preferably schedule transmissions to a mobile terminal on resources with advantageous channel conditions

- Most scheduling strategies need information about:

- channel conditions at the terminal

- buffer status and priorities of the different data flows

- interference situation in neighboring cells (if some form of interference coordination is implemented)

- Note that in addition to time domain scheduling, LTE also enables channel-dependent scheduling in the frequency domain

- The mobile terminal transmits channel-status reports reflecting the instantaneous channel quality in the time and frequency domains, in addition to information necessary to determine the appropriate antenna processing in case of spatial multiplexing

- Interference coordination, which tries to control the inter-cell interference on a slow basis, is also part of the scheduler

- Hybrid ARQ is not applicable for all types of traffic (broadcast transmissions typically do not rely on hybrid ARQ). Hence, hybrid ARQ is only supported for the DL-SCH and the UL-SCH

- In hybrid ARQ, multiple parallel stop-and-wait processes are used (this can result in data being delivered from the hybrid-ARQ mechanism out of sequence; in-sequence delivery is ensured by the RLC layer)
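
Since the scheduling strategy is not specified by 3GPP, the following is only one possible sketch of channel-dependent scheduling in the frequency domain. It uses the textbook proportional-fair metric as an example strategy; the function name, the per-resource-block rate table, and the buffer model are assumptions made for illustration.

```python
from typing import Dict, List, Optional


def proportional_fair_schedule(
    cqi_rate: Dict[str, List[float]],
    avg_throughput: Dict[str, float],
    buffer_bits: Dict[str, float],
) -> List[Optional[str]]:
    """Assign each resource block to at most one terminal for the current TTI.

    cqi_rate[ue][rb] is the rate the terminal could achieve on that resource
    block according to its channel-status report. The metric (achievable
    rate divided by average throughput) is the proportional-fair rule,
    chosen here purely as an example.
    """
    n_rb = len(next(iter(cqi_rate.values())))
    remaining = dict(buffer_bits)  # buffer status per terminal
    allocation: List[Optional[str]] = []
    for rb in range(n_rb):
        candidates = [ue for ue in cqi_rate if remaining[ue] > 0]
        if not candidates:
            allocation.append(None)  # nobody has data left to send
            continue
        best = max(
            candidates,
            key=lambda ue: cqi_rate[ue][rb] / max(avg_throughput[ue], 1e-9),
        )
        allocation.append(best)
        remaining[best] -= cqi_rate[best][rb]
    return allocation
```

With two terminals that each report a good channel on a different resource block, each terminal is given the block on which its channel is better; a terminal with an empty buffer is never scheduled.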

Physical Layer

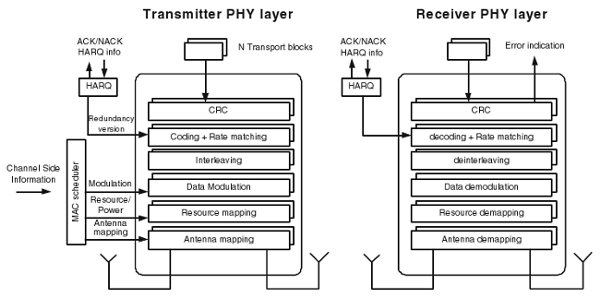

- In the downlink, the DL-SCH is the main channel for data transmission, but the processing for PCH and MCH is similar

- A CRC, used for error detection in the receiver, is attached, followed by Turbo coding for error correction

- Rate matching is used not only to match the number of coded bits to the amount of resources allocated for the DL-SCH transmission, but also to generate the different redundancy versions as controlled by the hybrid-ARQ protocol

- After rate matching, the coded bits are modulated using QPSK, 16QAM, or 64QAM, followed by antenna mapping

- The output of the antenna processing is mapped to the physical resources used for the DL-SCH

- The resources, as well as the transport-block size and the modulation scheme, are under control of the scheduler (of the MAC layer)

- For the broadcast of system information on the BCH, a mobile terminal must be able to receive this information channel as one of the first steps prior to accessing the system. Consequently, the transmission format must be known to the terminals a priori and there is no dynamic control of any of the transmission parameters from the MAC layer in this case
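
The DL-SCH processing steps listed above (CRC attachment, channel coding, rate matching, modulation) can be illustrated with a toy chain. This is a simplified sketch under loud assumptions: the CRC uses what is assumed to be the 24-bit LTE generator polynomial, a 3x repetition code stands in for the Turbo coder, rate matching is a plain circular-buffer read whose start offset depends on the redundancy version, and only QPSK is shown. All function names are hypothetical.

```python
import math
from typing import List

# Assumed: 24-bit CRC generator with the leading x^24 term dropped
CRC24A_POLY = 0x864CFB


def crc24(bits: List[int]) -> List[int]:
    """Remainder of bits * x^24 divided by the generator (bitwise long division)."""
    reg = 0
    for b in bits + [0] * 24:
        reg = (reg << 1) | b
        if reg & (1 << 24):
            reg ^= (1 << 24) | CRC24A_POLY
    return [(reg >> i) & 1 for i in range(23, -1, -1)]


def toy_encode(bits: List[int]) -> List[int]:
    """Rate-1/3 repetition code, a stand-in for the real Turbo coder."""
    return [b for b in bits for _ in range(3)]


def rate_match(coded: List[int], n_out: int, rv: int = 0) -> List[int]:
    """Read n_out bits from a circular buffer; rv (0..3) shifts the start point."""
    start = (rv * len(coded)) // 4
    return [coded[(start + i) % len(coded)] for i in range(n_out)]


def qpsk(bits: List[int]) -> List[complex]:
    """Map bit pairs to unit-energy QPSK symbols (bit 0 -> +, bit 1 -> -)."""
    s = 1 / math.sqrt(2)
    return [
        complex(s * (1 - 2 * bits[i]), s * (1 - 2 * bits[i + 1]))
        for i in range(0, len(bits), 2)
    ]


transport_block = [1, 0, 1, 1, 0, 0, 1, 0]
block = transport_block + crc24(transport_block)   # CRC attachment
coded = toy_encode(block)                          # channel coding (toy)
matched = rate_match(coded, n_out=120, rv=0)       # fit the allocated resources
symbols = qpsk(matched)                            # modulation
```

The receiver-side check is that the CRC of the block with its CRC appended is all zeros. Changing `rv` selects a different window of the coded bits, which is the role redundancy versions play for hybrid-ARQ retransmissions.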

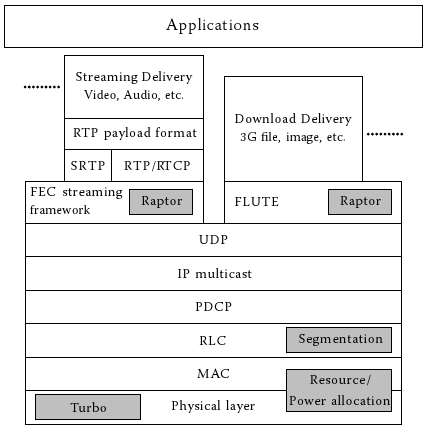

MBMS

- Introduced for WCDMA (UMTS) in Release 6

- Supports multicast/broadcast services in a cellular system

- Same content is transmitted to multiple users located in a specific area (MBMS service area) in a unidirectional fashion

- MBMS extends existing 3GPP architecture by introducing:

- MBMS Bearer Service delivers IP multicast datagrams to multiple receivers using minimum radio and network resources and provides an efficient and scalable means to distribute multimedia content to mobile phones

- MBMS User Services

- streaming services - a continuous data flow of audio and/or video is delivered to the user's handset

- download services - data for the file is delivered in a scheduled transmission timeslot

- The p-t-m MBMS Bearer Service allows neither control, mode adaptation, nor retransmission of lost radio packets (thus, the QoS provided for transport of multimedia applications is in general not high enough to support a significant portion of the users for either download or streaming applications)

- Consequently, 3GPP included an application layer FEC based on Raptor codes for MBMS

- MBMS User Services may be distributed over p-t-p links if decided to be more efficient

- The Broadcast Multicast Service Center (BM-SC) node is responsible for authorization and authentication of content providers, charging, and the overall data flow through the Core Network (CN)

- In the case of multicast, a request to join the session has to be sent to become a member of the corresponding MBMS service group

- In contrast to previous releases of Universal Terrestrial Radio Access Network (UTRAN), in MBMS a data stream intended for multiple users is not split until necessary (in UTRAN, one stream per user existed both within CN and RAN)

- MBMS services are power limited and maximize the diversity without relying on feedback from users

- Two techniques are used to provide diversity:

- Macro-diversity: combining transmission from multiple cells

- Soft combining: combines the soft bits received from the different radio links prior to (Turbo) decoding

- Selection combining: decoding the signal received from each cell individually, and for each TTI selects one (if any) of the correctly decoded data blocks for further processing by higher layers

- Time-diversity: against fast fading through a long Transmission Time Interval (TTI) and application-level coding

- because broadcast cannot rely on feedback (feedback links are not available for p-t-m bearers on the radio access network), MBMS uses application-level forward error-correcting coding, namely Systematic Raptor codes

- Streaming data are encapsulated in RTP, whereas download delivery uses the FLUTE protocol when delivering over MBMS bearers

- MAC layer maps and multiplexes the RLC-PDUs to the transport channel and selects the transport format depending on the instantaneous source rate

- MBMS uses the MBMS point-to-multipoint Traffic Channel (MTCH), which enables p-t-m distribution. This channel is mapped to the Forward Access Channel (FACH), which is finally mapped to the Secondary Common Control Physical Channel (S-CCPCH)

- The TTI is transport channel specific and can be selected from the set {10 ms, 20 ms, 40 ms, 80 ms} for MBMS
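
The two macro-diversity schemes described above differ in where the combining happens, which a short sketch can make concrete. The code below is illustrative only: it assumes soft bits are log-likelihood ratios (positive meaning bit 0), skips the Turbo decoder itself, and uses hypothetical function names.

```python
from typing import List, Optional, Tuple


def soft_combine(llrs_per_cell: List[List[float]]) -> List[float]:
    """Add the soft bits from all radio links bit by bit, before decoding."""
    return [sum(bit_llrs) for bit_llrs in zip(*llrs_per_cell)]


def hard_decision(llrs: List[float]) -> List[int]:
    """Assumed convention: non-negative LLR means bit 0, negative means bit 1."""
    return [0 if llr >= 0 else 1 for llr in llrs]


def selection_combine(blocks_per_cell: List[Tuple[bytes, bool]]) -> Optional[bytes]:
    """Pick one correctly decoded block for this TTI, or None if every cell failed.

    Each entry is (decoded_block, crc_ok), where each cell's signal has been
    decoded individually.
    """
    for block, crc_ok in blocks_per_cell:
        if crc_ok:
            return block
    return None
```

In the soft-combining case, a bit that is weakly wrong on one link can be corrected by a strong observation on another link; selection combining can only choose among blocks that were already decoded correctly on their own.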

Evolved Multimedia Broadcast Multicast Services (eMBMS)

- Is a multimedia service provided either in single-cell broadcast mode or in multicell mode (also known as MBMS Single Frequency Network (MBSFN))

- In an MBSFN area, all eNBs are synchronized to perform simulcast transmission from multiple cells (each cell transmitting an identical waveform)

- There are three types of cells within an MBSFN area: transmitting/receiving, transmitting only, and reserved

- If a user is close to one base station, the difference in arrival delay between the signals from two cells can be quite large, so the subcarrier spacing is reduced to 7.5 kHz and a longer cyclic prefix (CP) is used
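
The effect of the reduced subcarrier spacing can be checked with a little arithmetic. The sketch below assumes the CP is one quarter of the useful OFDM symbol time in both cases (about 16.7 µs at 15 kHz with the extended CP and about 33.3 µs at 7.5 kHz) and treats the CP length as the largest arrival-time difference that can be absorbed; the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def useful_symbol_time_us(subcarrier_spacing_hz: float) -> float:
    """Useful OFDM symbol duration is the inverse of the subcarrier spacing."""
    return 1e6 / subcarrier_spacing_hz


def cyclic_prefix_us(subcarrier_spacing_hz: float, cp_fraction: float = 0.25) -> float:
    """Assumed: CP length is a fixed fraction of the useful symbol time."""
    return cp_fraction * useful_symbol_time_us(subcarrier_spacing_hz)


def max_path_difference_km(cp_us: float) -> float:
    """Largest difference in propagation distance whose delay still fits in the CP."""
    return SPEED_OF_LIGHT_M_PER_S * cp_us * 1e-6 / 1000.0


for spacing_hz in (15_000.0, 7_500.0):
    cp = cyclic_prefix_us(spacing_hz)
    print(f"{spacing_hz / 1000:.1f} kHz: symbol {useful_symbol_time_us(spacing_hz):.1f} us, "
          f"CP {cp:.1f} us, path difference up to {max_path_difference_km(cp):.1f} km")
```

Halving the subcarrier spacing doubles the symbol time, so a CP of the same relative overhead tolerates roughly twice the path difference between cells (about 10 km instead of about 5 km under these assumptions).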

Related Research Papers Summaries

Mobile Video Transmission Using Scalable Video Coding

- In current system architectures, the differentiation of data is very coarse (each flow is only differentiated among four classes: conversational, streaming, interactive, and background). Individual packets within each flow are all treated the same.

- Investigating per packet QoS would enable general packet marking strategies (such as Differentiated Services). This can be done by either:

- Mapping SVC priority information to Differentiated Services Code Point (DSCP) to introduce per packet QoS

- Making the scheduler media-aware (e.g. by including some MANE-like functionality), and therefore able to use priority information in the SVC NAL unit header

- Many live-media distribution protocols are based on RTP, including p-t-m transmission (e.g. DVB-H or MBMS). Providing different layers on different multicast addresses, for example, allows different protection strengths to be applied to different layers

- By providing signalling in the RTP payload header as well as in the SDP session signalling, adaptation (for bitrate or device capability) can be applied in the network by nodes typically known as MANE
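
The first option above, mapping SVC priority information to DSCP, could look roughly like the sketch below. The mapping policy is hypothetical and not taken from the paper; only the Assured Forwarding code point values and the position of the DSCP in the upper six bits of the IP DS field are standard. `SvcNalInfo` is an assumed container for layer identifiers already parsed from the SVC NAL unit header.

```python
from dataclasses import dataclass

# Standard DSCP values for Assured Forwarding class 4
# (low, medium, high drop precedence)
AF41, AF42, AF43 = 34, 36, 38


@dataclass
class SvcNalInfo:
    dependency_id: int  # spatial layer
    quality_id: int     # SNR (quality) layer
    temporal_id: int    # temporal layer


def dscp_for_nal(nal: SvcNalInfo) -> int:
    """Hypothetical policy: the base layer gets the lowest drop precedence."""
    if nal.dependency_id == 0 and nal.quality_id == 0:
        return AF41 if nal.temporal_id == 0 else AF42
    return AF43  # enhancement layers are dropped first under congestion


def ds_field(dscp: int) -> int:
    """The DSCP occupies the upper six bits of the IP DS (former TOS) byte."""
    return dscp << 2
```

A router that honours drop precedence would then discard enhancement-layer packets before base-layer packets of the same flow, which is the per-packet differentiation the paper argues for.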

Downlink OFDM Scheduling and Resource Allocation for Delay Constrained SVC Streaming

- Problem Definition:

- Designing efficient multi-user video streaming protocols that fully exploit the resource allocation flexibility in OFDM and the performance scalabilities in SVC

- Maximize average PSNR for all video users under a total downlink transmission power constraint based on a stochastic subgradient-based scheduling framework

- Authors generalize their previous downlink OFDM resource allocation algorithm for elastic data traffic to real-time video streaming by further considering dynamically adjusted priority weights based on the current video content, deadline requirements, and the previous transmission results

- Main steps:

- Divide video data into subflows based on the contribution of distortion decrease and the delay requirements of individual video frames

- Calculate the weights of current subflows according to their rate-distortion properties, playback deadline requirements, and the previous transmission results

- Use a rate-distortion weighted transmission scheduling strategy, based on the existing gradient related approach

- To overcome the deadline-approaching effect, deliberately include a multiplicative term in the weight calculation that increases as the deadline approaches
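
The main steps above can be sketched as a weight computation followed by a weighted-rate selection. The formula below is an illustrative assumption, not the one from the paper: the weight is the distortion decrease per bit multiplied by an urgency factor that grows as the playback deadline approaches, and `Subflow`, `weight`, `pick_next`, and `urgency_scale_s` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Subflow:
    user: str
    distortion_decrease: float   # e.g. MSE reduction if the subflow is delivered
    bits: int                    # remaining size of the subflow
    time_to_deadline_s: float    # time left until the playback deadline
    achievable_rate_bps: float   # rate the current channel state would allow


def weight(sf: Subflow, urgency_scale_s: float = 0.1) -> float:
    """Rate-distortion slope times a term that increases near the deadline."""
    rd_slope = sf.distortion_decrease / sf.bits
    urgency = 1.0 + urgency_scale_s / max(sf.time_to_deadline_s, 1e-3)
    return rd_slope * urgency


def pick_next(subflows: List[Subflow]) -> Optional[Subflow]:
    """Gradient-style choice: maximize weight times achievable rate."""
    live = [sf for sf in subflows if sf.time_to_deadline_s > 0]  # skip expired data
    if not live:
        return None
    return max(live, key=lambda sf: weight(sf) * sf.achievable_rate_bps)
```

With equal channel rates, a subflow with a slightly smaller distortion contribution can still win if its deadline is much closer, which is the intended effect of the urgency term.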

Video Streaming over MBMS: A System Design Approach

- Introduces and analyzes the main system design parameters that influence the performance of H.264 streaming over EGPRS and UMTS bearers

- Investigates the application of an advanced receiver concept (permeable layer receiver) in MBMS video broadcasting environments

- Presents RealNeS-MBMS, a real-time testbed for MBMS

Scalable and Media Aware Adaptive Video Streaming over Wireless Networks

- Introduces a packet scheduling algorithm, implemented in a MANE, that operates on the different substreams of the main scalable video stream

- Exploits SVC coding to provide a subset of hierarchically organized substreams at the RLC layer entry point and uses the scheduling algorithm to select the scalable substreams to be transmitted to the RLC layer depending on the channel transmission conditions

- General idea:

- perform fair scheduling between scalable substreams until deadline of oldest unsent data units with higher priorities is approaching

- do not maintain fairness if a deadline is expected to be violated; packets with lower priorities are first delayed and later dropped if necessary

- In addition, SVC coding is tuned, leading to a generalized scalability scheme including regions of interest (ROI) (combining ROI coding with SNR and temporal scalability provides a wide range of possible bitstream partitions)
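
The general idea above (fair scheduling until a deadline is close, then strict priority, with late data dropped) can be sketched as below. This is an interpretation under assumptions rather than the algorithm from the paper: fairness is modelled as round-robin, "approaching" means within a fixed guard time, and the class and parameter names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional, Tuple


@dataclass
class DataUnit:
    deadline_s: float
    size_bytes: int


class SubstreamScheduler:
    """queues[0] is the highest-priority substream (e.g. the base layer)."""

    def __init__(self, n_substreams: int, guard_s: float = 0.05):
        self.queues: List[Deque[DataUnit]] = [deque() for _ in range(n_substreams)]
        self.guard_s = guard_s   # assumed threshold for "deadline is approaching"
        self._next = 0           # round-robin pointer

    def push(self, prio: int, unit: DataUnit) -> None:
        self.queues[prio].append(unit)

    def pop(self, now_s: float) -> Optional[Tuple[int, DataUnit]]:
        # Drop data units whose deadline has already passed.
        for q in self.queues:
            while q and q[0].deadline_s <= now_s:
                q.popleft()
        # Urgent case: serve the highest-priority substream whose oldest
        # unit is close to its deadline; lower priorities are delayed.
        for prio, q in enumerate(self.queues):
            if q and q[0].deadline_s - now_s <= self.guard_s:
                return prio, q.popleft()
        # Normal case: round-robin between non-empty substreams.
        n = len(self.queues)
        for i in range(n):
            prio = (self._next + i) % n
            if self.queues[prio]:
                self._next = (prio + 1) % n
                return prio, self.queues[prio].popleft()
        return None
```

Far from any deadline the substreams alternate; once the oldest units come within the guard time, the higher-priority substream is served first and lower-priority units that miss their deadline are discarded on the next call.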

References

- LTE - An Introduction, Ericsson White Paper, 2009.

- E. Dahlman, S. Parkvall, J. Sköld, and P. Beming, 3G Evolution: HSPA and LTE for Mobile Broadband, 2nd Edition, Academic Press, 2008.

- M. Ergen, Mobile Broadband: Including WiMAX and LTE, Springer, 2009.

- S. Sesia, I. Toufik, and M. Baker, LTE - The UMTS Long Term Evolution: From Theory to Practice, John Wiley & Sons, 2009.

- G. Liebl, T. Schierl, T. Wiegand, and T. Stockhammer, Advanced Wireless Multiuser Video Streaming using the Scalable Video Coding Extensions of H.264/MPEG-4-AVC, In Proceedings of IEEE International Conference on Multimedia and Expo (ICME), 2006.

- N. Tizon and B. Pesquet-Popescu, Scalable and Media Aware Adaptive Video Streaming over Wireless Networks, EURASIP Journal on Advances in Signal Processing, 2008.

- T. Schierl, T. Stockhammer, and T. Wiegand, Mobile Video Transmission Using Scalable Video Coding, IEEE Transactions on Circuits and Systems for Video Technology, vol.17, no.9, pp.1204-1217, 2007.

- X. Ji, J. Huang, M. Chiang, and F. Catthoor, Downlink OFDM Scheduling and Resource Allocation for Delay Constrained SVC Streaming, In Proceedings of the IEEE International Conference on Communications (ICC), 2008.

- J. Afzal, T. Stockhammer, T. Gasiba, and W. Xu, Video Streaming over MBMS: A System Design Approach, Journal of Multimedia, vol. 1, no. 5, pp. 25-35, 2006.

- Has South Korea lost its lead with mobile tech? From BBC Click

Tools

- Nomor Research GmbH provides a Network Emulator for Application Testing solution, in addition to an LTE Protocol Stack Library and an LTE eNB Emulator

- IXIA IxCatapult High-Capacity LTE UE Simulation

- IXIA IxCatapult LTE Testing solution

- Anritsu MD8430A LTE Base Station Simulator

- B-BONE Project MBMS System Level Simulator for OPNET