2D to 3D Video Conversion

Latest revision as of 11:43, 21 February 2018

People

- Kiana Calagari

- Mohamed Hefeeda

Abstract

Widespread adoption of 3D displays is hindered by the lack of content that matches user expectations. Producing 3D videos is far more costly and time-consuming than producing regular 2D videos, which makes it challenging and thus rarely attempted, especially for live events such as soccer games. In this project we develop a high-quality automated 2D-to-3D conversion method for soccer videos. Our method is data-driven, relying on a reference database of 3D videos. Our key insight is to use computer-generated depth from current computer sports games to create a synthetic 3D database.

Details

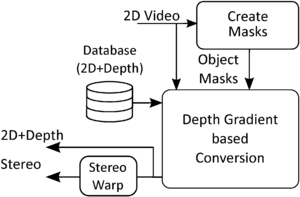

The figure below shows an overview of our conversion system. We infer depth from a database of synthetically generated, high-quality depth maps collected from video games. We then perform the conversion by transferring the depth gradient field from the database and reconstructing depth using Poisson reconstruction. To maintain sharp and accurate object boundaries, we create object masks and modify the Poisson equation on object boundaries. Finally, using the 2D frames and their estimated depth, the left and right views of each stereo pair are rendered using a stereo-warping technique.

| The proposed 2D-to-3D conversion system |
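The final stage described above, rendering a stereo pair from a frame and its estimated depth, can be illustrated with a minimal sketch. This is not the stereo-warping technique used in the project, which this page does not specify; it is plain forward warping of each scanline. The function names, the `max_disparity` parameter, the convention that depth is normalized to [0, 1] with 1 nearest to the camera, and the hole-filling rule are all assumptions made for illustration.

```python
def warp_row(row, depth_row, max_disparity, direction):
    """Forward-warp one scanline.

    Assumptions: depth is in [0, 1] with 1 = nearest to the camera, and the
    disparity is linear in depth (0 for the farthest plane).
    direction is +1 for the left view and -1 for the right view.
    """
    width = len(row)
    out = [None] * width          # None marks a hole (no source pixel landed here)
    out_depth = [-1.0] * width    # depth of the pixel currently stored in `out`
    for x in range(width):
        shift = int(round(direction * max_disparity * depth_row[x]))
        tx = x + shift
        # Nearer pixels overwrite farther ones that map to the same target.
        if 0 <= tx < width and depth_row[x] > out_depth[tx]:
            out[tx] = row[x]
            out_depth[tx] = depth_row[x]
    # Naive hole filling: copy the nearest filled pixel on the left ...
    last = None
    for x in range(width):
        if out[x] is None:
            out[x] = last
        else:
            last = out[x]
    # ... and, for holes at the start of the row, the nearest one on the right.
    nxt = None
    for x in range(width - 1, -1, -1):
        if out[x] is None:
            out[x] = nxt
        else:
            nxt = out[x]
    return out


def render_stereo_pair(image, depth, max_disparity=2):
    """Return (left, right) views of a frame given as a list of pixel rows."""
    left = [warp_row(r, d, max_disparity, +1) for r, d in zip(image, depth)]
    right = [warp_row(r, d, max_disparity, -1) for r, d in zip(image, depth)]
    return left, right
```

With a uniform depth of 0 both views are copies of the input frame; as depth grows, pixels shift in opposite directions in the two views. A production renderer would fill holes from the background side and work at sub-pixel precision, which this sketch does not attempt.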

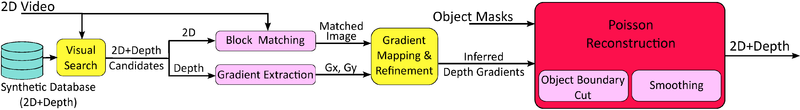

The main components of our depth-gradient-based conversion technique are as follows:

- Visual Search: For each frame of the query video, we identify the K most similar database frames based on GIST and color features.

- Block-based Matching: Using the K candidate frames, we construct a matching image that is similar to the query frame and provides a mapping between the candidates and the query frame. To construct this matching image, we divide the query frame into small blocks and compare each block against all possible blocks in the K candidates. The block with the smallest Euclidean distance is chosen as the corresponding block.

- Poisson Reconstruction: We copy the corresponding depth gradients from the matched image to the query frame and reconstruct the depth values from the copied depth gradients using the Poisson equation.

- Object Boundary Cuts: To maintain sharp and accurate object boundaries, we create object masks, detect their edges with the Canny edge detector, and disconnect pixels from the object boundaries by not allowing them to use an object boundary pixel as a valid neighbor.

- Smoothing: We add smoothness constraints to the Poisson reconstruction by enforcing the higher-order depth derivatives to be zero.

| The main components of our depth gradient based conversion |
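The visual search and block-based matching steps listed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: frames are plain lists of grayscale rows, blocks are compared on raw pixel values (the page does not say which block features are used), the global descriptors are assumed to be precomputed, and the names `k_most_similar`, `get_block` and `match_blocks` are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance; it has the same minimizer as the distance itself."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_most_similar(query_desc, database_descs, k):
    """Visual search: indices of the k database descriptors closest to the query.

    Descriptors are assumed to be precomputed feature vectors
    (for example GIST and color), one per database frame.
    """
    order = sorted(range(len(database_descs)),
                   key=lambda i: sq_dist(query_desc, database_descs[i]))
    return order[:k]


def get_block(frame, top, left, size):
    """Flatten the size x size block whose top-left corner is (top, left)."""
    return [v for row in frame[top:top + size] for v in row[left:left + size]]


def match_blocks(query, candidates, size):
    """Block-based matching.

    For every non-overlapping block of the query frame, exhaustively search
    all block positions in all candidate frames and keep the closest one.
    Returns {(top, left): (candidate_index, cand_top, cand_left)}.
    """
    height, width = len(query), len(query[0])
    mapping = {}
    for top in range(0, height - size + 1, size):
        for left in range(0, width - size + 1, size):
            q_block = get_block(query, top, left, size)
            best = None
            for ci, cand in enumerate(candidates):
                for cy in range(len(cand) - size + 1):
                    for cx in range(len(cand[0]) - size + 1):
                        d = sq_dist(q_block, get_block(cand, cy, cx, size))
                        if best is None or d < best[0]:
                            best = (d, ci, cy, cx)
            mapping[(top, left)] = best[1:]
    return mapping
```

The returned mapping is what the next step needs: for each query block it names the candidate block whose depth gradients would be copied. The exhaustive search is quadratic in frame size and is only meant to make the idea concrete.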

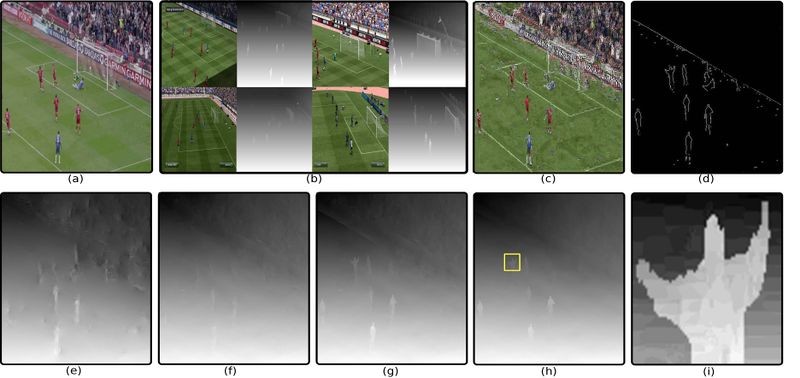

| The effect of each step in our depth estimation technique: (a) Query, (b) A subset of its K candidates, (c) Created matched image, (d) Object boundary cuts, (e) Depth estimation using Poisson reconstruction, (f) Gradient refinement and Poisson reconstruction, (g) Depth with object boundary cuts, (h) Final depth estimation with smoothness, and (i) The zoomed and amplified version of the yellow block in h. |
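Steps (e) and (g) above, Poisson reconstruction with and without object boundary cuts, can be sketched with a small iterative solver. This is an illustration under assumptions, not the authors' solver: it uses Gauss-Seidel sweeps on plain Python lists, forward-difference gradients, and a hypothetical `cut` mask marking object boundary pixels; the smoothness term is omitted, and the depth offset between regions that a cut fully separates is left undetermined.

```python
def reconstruct_depth(gx, gy, height, width, cut=None, sweeps=500):
    """Least-squares depth from a target gradient field (Poisson reconstruction).

    gx[y][x] is the desired depth[y][x+1] - depth[y][x]  (width-1 values per row)
    gy[y][x] is the desired depth[y+1][x] - depth[y][x]  (height-1 rows)
    cut[y][x] == True marks an object boundary pixel; such a pixel is never
    used as a neighbor by other pixels (assumed reading of the boundary cuts).
    The result is shifted so that its minimum is 0.
    """
    if cut is None:
        cut = [[False] * width for _ in range(height)]
    depth = [[0.0] * width for _ in range(height)]
    for _ in range(sweeps):
        for y in range(height):
            for x in range(width):
                # Each entry: (ny, nx, desired depth[y][x] - depth[ny][nx]).
                neighbours = []
                if x > 0:
                    neighbours.append((y, x - 1, gx[y][x - 1]))
                if x < width - 1:
                    neighbours.append((y, x + 1, -gx[y][x]))
                if y > 0:
                    neighbours.append((y - 1, x, gy[y - 1][x]))
                if y < height - 1:
                    neighbours.append((y + 1, x, -gy[y][x]))
                total, count = 0.0, 0
                for ny, nx, diff in neighbours:
                    if cut[ny][nx]:
                        continue  # boundary pixels are not valid neighbors
                    total += depth[ny][nx] + diff
                    count += 1
                if count:
                    # Gauss-Seidel update: average of what each neighbor predicts.
                    depth[y][x] = total / count
    lowest = min(min(row) for row in depth)
    return [[v - lowest for v in row] for row in depth]
```

Without a cut, a single wrong gradient copied from the database propagates to every pixel beyond it. With a cut at the boundary pixel, the pixels on either side are solved independently, so the error stays local. Real frames would call for a sparse linear solver rather than fixed sweeps.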

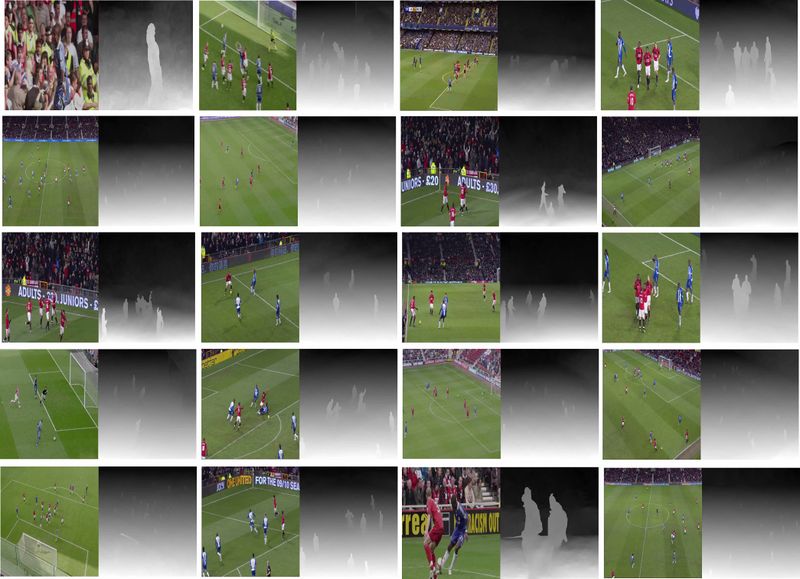

The following figure shows some results of our depth estimation technique. Note how we can handle a wide variety of video shots, including different camera views.

| Depth estimation for a wide variety of soccer shots using our method |

Publications

K. Calagari, M. Elgharib, P. Didyk, A. Kaspar, W. Matusik, and M. Hefeeda, "Gradient-based 2D-to-3D Conversion for Soccer Videos", In Proc. of the ACM Multimedia conference (MM'15), pp. 331-340, October 2015.

K. Calagari, M. Elgharib, P. Didyk, A. Kaspar, W. Matusik, and M. Hefeeda, "Data Driven 2-D-to-3-D Video Conversion for Soccer", IEEE Transactions on Multimedia (TMM), Vol. 20, Issue 3, pp. 605-619, 2018.