Immersive Content Generation from Standard 2D Videos

People

- Kiana Calagari

- Mohamed Hefeeda

Abstract

This project aims to create compelling immersive videos for virtual reality (VR) devices using only standard 2D videos. The work focuses on field sports such as soccer, hockey, and basketball. Currently, the only way to create immersive content is to use multiple cameras and 360 camera rigs. This means that, in addition to the standard 2D cameras already placed around the field, expensive infrastructure must be installed and managed to shoot and generate immersive content. We propose a more practical alternative: using the content of the existing standard 2D cameras around the field to generate an immersive video.

| The aim is to create compelling immersive videos using only standard 2D videos. |

Details

Setup

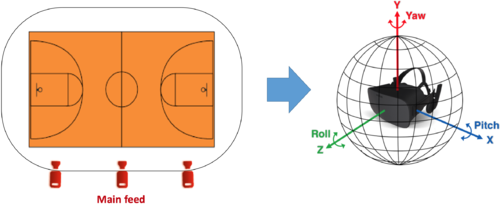

We assume a setup with at least three cameras, as listed below. This setup is practical: these cameras are usually already in place when field sports are captured and broadcast.

- The main camera, located at the middle of the field. This rotating camera captures wide views and follows the ball around the field. It is usually the main camera used for broadcasting games, and most of the feed that the audience sees comes from it.

- A camera on the right side of the field, which covers players on the right who might be missing from the main camera's view. This camera does not have to rotate.

- A camera on the left side of the field, which covers players on the left who might be missing from the main camera's view. This camera does not have to rotate.

Process

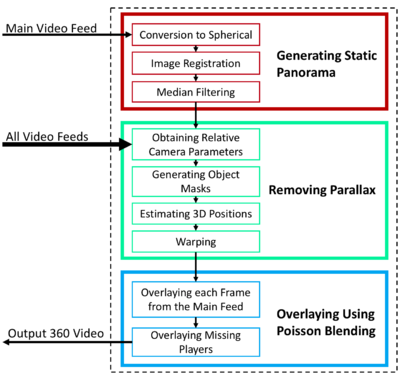

The main steps for generating an immersive video from 2D cameras around the field can be described as follows:

- Generating a still panorama using the motion of the main camera: The viewing angle in regular sports videos is usually not wide enough for an immersive experience, and a wider viewing angle is needed to improve the sense of presence. We therefore widen the viewing angle by exploiting the camera rotation to generate a panorama image that contains the static parts of the scene. The frames captured during the rotation are aligned using image registration techniques, and median filtering is then applied across the aligned frames to keep only the static content.

- Removing parallax between all video feeds: In a regular sports production, the cameras are usually placed meters away from each other, which causes a large amount of parallax between them. By estimating the 3D pixel positions and the relative camera parameters, we warp each video feed to the position of the main camera to remove this parallax.

- Overlaying frames on the panorama: For each frame, we first overlay the main feed on the panorama. Players missing from the main feed are then identified and copied from the left and right video feeds. To blend the copied parts seamlessly with the background, we use Poisson blending.
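The median-filtering idea in the first step can be illustrated with a minimal sketch. It assumes the frames have already been registered to a common panorama canvas, and it uses a hypothetical convention in which `None` marks canvas pixels a frame does not cover. The function name `median_panorama` and the toy data are illustrative only and are not taken from the project's implementation; a practical version would operate on image arrays rather than nested lists.

```python
from statistics import median


def median_panorama(aligned_frames):
    """Per-pixel temporal median over frames registered to one canvas.

    Each frame is a 2D list of grayscale values. None marks canvas pixels
    that the frame does not cover (a convention assumed for this sketch).
    """
    height = len(aligned_frames[0])
    width = len(aligned_frames[0][0])
    panorama = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Collect every observation of this canvas pixel over time.
            samples = [f[y][x] for f in aligned_frames if f[y][x] is not None]
            if samples:
                # Moving objects occupy a pixel only briefly, so the median
                # favors the static background value.
                panorama[y][x] = median(samples)
    return panorama


# Three registered frames over a 1x4 canvas. A "player" (value 200) moves
# across a static background (value 50); frames cover the canvas partially.
frames = [
    [[50, 200, 50, None]],
    [[None, 50, 200, 50]],
    [[50, 50, 50, 50]],
]
background = median_panorama(frames)
```

In this toy input the moving value 200 never forms the majority at any pixel, so the resulting canvas contains only the background value.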

| The main steps of our technique. |
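To make the parallax-removal step more concrete, the sketch below reprojects a single pixel with known depth from one camera into another using a standard pinhole model. It is an illustration under stated assumptions, not the project's method: the intrinsics (`fx`, `fy`, `cx`, `cy`), the rotation and translation between cameras, and the depth values are all hypothetical inputs that would have to be estimated in practice.

```python
def reproject_pixel(u, v, depth, src_cam, dst_cam, rotation, translation):
    """Map pixel (u, v) with known depth from a source to a destination camera.

    Cameras are dicts holding pinhole intrinsics fx, fy, cx, cy.
    rotation (3x3 nested list) and translation (length 3) take points from
    source-camera coordinates to destination-camera coordinates.
    Returns None if the point falls behind the destination camera.
    """
    # Back-project the pixel to a 3D point in source-camera coordinates.
    x = (u - src_cam["cx"]) / src_cam["fx"] * depth
    y = (v - src_cam["cy"]) / src_cam["fy"] * depth
    point = (x, y, depth)

    # Apply the rigid transform between the two cameras.
    moved = [
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    if moved[2] <= 0:
        return None

    # Project the 3D point into the destination image.
    u2 = dst_cam["fx"] * moved[0] / moved[2] + dst_cam["cx"]
    v2 = dst_cam["fy"] * moved[1] / moved[2] + dst_cam["cy"]
    return u2, v2


# Hypothetical values: identical intrinsics, cameras offset by 2 m sideways.
camera = {"fx": 1000.0, "fy": 1000.0, "cx": 640.0, "cy": 360.0}
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
offset = [2.0, 0.0, 0.0]

near = reproject_pixel(640, 360, 20.0, camera, camera, identity, offset)
far = reproject_pixel(640, 360, 40.0, camera, camera, identity, offset)
```

With these numbers, the same source pixel lands at a different horizontal position depending on its depth (a larger shift for the nearer point). This is why a single 2D transform cannot align feeds from cameras that are meters apart, and why per-pixel 3D positions are needed.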

The following figure shows examples of final panoramas generated by our technique. The blue arrows indicate players who were missing from the main feed and were copied from the left and right feeds.

| Examples of final panoramas generated by our technique for different games: basketball (top), hockey (middle), and volleyball (bottom). The blue arrows indicate the players that have been copied from the left or right feeds. |
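The Poisson blending used when copying players onto the panorama can be sketched as follows. This is a minimal grayscale illustration that solves the discrete Poisson equation with Gauss-Seidel iterations; the function name `poisson_blend`, the iteration count, and the toy images are assumptions made for the example, and a production system would more likely rely on an optimized solver or library routine.

```python
def poisson_blend(target, source, mask, iterations=200):
    """Paste `source` into `target` where `mask` is True, matching gradients.

    All inputs are 2D lists of the same size. Masked pixels must not touch
    the image border. Inside the mask, the result keeps the gradients of
    `source`; on the mask boundary it agrees with `target`.
    """
    height, width = len(target), len(target[0])
    result = [row[:] for row in target]
    neighbours = ((-1, 0), (1, 0), (0, -1), (0, 1))
    for _ in range(iterations):
        for y in range(1, height - 1):
            for x in range(1, width - 1):
                if not mask[y][x]:
                    continue
                total = 0.0
                for dy, dx in neighbours:
                    ny, nx = y + dy, x + dx
                    # Neighbour value (fixed boundary or current estimate)
                    # plus the source gradient toward that neighbour.
                    total += result[ny][nx] + (source[y][x] - source[ny][nx])
                result[y][x] = total / 4.0
    return result


# Toy example: the source patch is darker overall (30 vs. 100) but holds a
# bright detail that is 50 above its surroundings.
size = 5
target = [[100.0] * size for _ in range(size)]
source = [[30.0] * size for _ in range(size)]
source[2][2] = 80.0
mask = [[0 < y < size - 1 and 0 < x < size - 1 for x in range(size)]
        for y in range(size)]

blended = poisson_blend(target, source, mask)
```

Because only gradients are transferred, the overall brightness difference between the source and the target disappears in this example, while the bright detail keeps its contrast relative to its new surroundings. That is the property that hides seams around a pasted region.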

Publications

K. Calagari, M. Elgharib, S. Shirmohammadi, and M. Hefeeda, “Sports VR Content Generation from Regular Camera Feeds,” in Proc. of ACM Multimedia (MM’17), pp. 699-707, October 2017.