Cloud Gaming

Cloud gaming is a large, rapidly growing, multi-billion-dollar industry. It enables users to play games on thin clients such as tablets, smartphones, and smart TVs without worrying about processing power, memory size, or graphics card capabilities. High-quality games can be played on virtually any device, anywhere, without high-end gaming consoles and without installing or updating software. This significantly increases the potential number of users and thus the market size. Most major IT companies offer cloud gaming services, such as Sony PlayStation Now, Google Stadia, Nvidia GeForce Now, and Amazon Tempo.

Cloud gaming essentially moves the game logic and rendering from the user’s device to the cloud: the entire game runs in the cloud, and the rendered scenes are streamed to users in real time. Rendering and streaming from the cloud, however, substantially increase the bandwidth required to serve gaming clients. Moreover, given the large scale and heterogeneity of clients, numerous streams need to be created and served from the cloud in real time, which creates a major challenge for cloud gaming providers. Thus, minimizing the resources needed to render, encode, customize, and deliver gaming streams to millions of users is an important problem. The problem becomes even more complex for advanced, next-generation games, such as ultra-high-definition and immersive games, which are growing in popularity.
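To give a sense of scale for the bandwidth argument above, here is a minimal sketch that computes the bitrate of an uncompressed rendered stream and the aggregate egress when every player receives an individual stream. The resolutions, the 60 fps frame rate, 24 bits per pixel (8-bit RGB), the 100,000 concurrent players, and the 20 Mbit/s per-stream bitrate are illustrative assumptions, not figures from this project, and the function names are hypothetical.

```python
def raw_bitrate_gbps(width: int, height: int, fps: float, bits_per_pixel: float = 24.0) -> float:
    """Bitrate of an uncompressed video stream in Gbit/s (1 Gbit = 1e9 bits).

    24 bits/pixel corresponds to 8-bit RGB; 8-bit YUV 4:2:0 would need 12 bits/pixel.
    """
    return width * height * fps * bits_per_pixel / 1e9


def aggregate_egress_gbps(num_streams: int, mbps_per_stream: float) -> float:
    """Total outgoing bandwidth in Gbit/s when each player gets its own encoded stream."""
    return num_streams * mbps_per_stream / 1e3


if __name__ == "__main__":
    for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
        print(f"{name}@60 raw RGB: {raw_bitrate_gbps(w, h, 60):.2f} Gbit/s")
    # Hypothetical load: 100,000 concurrent players at an assumed 20 Mbit/s each.
    print(f"aggregate egress: {aggregate_egress_gbps(100_000, 20):.0f} Gbit/s")
```

Run as a script, it reports about 2.99 Gbit/s for raw 1080p at 60 fps and 11.94 Gbit/s for raw 4K at 60 fps. This is why every frame must be compressed before streaming, and why even compressed streams add up quickly across many concurrent players.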

In this project, we partner with AMD Canada to design next-generation cloud gaming systems that optimize quality, bitrate, and delay. Such systems will not only improve the quality of experience for both players and viewers, but will also reduce costs and resource usage for service providers.

People

- Haseeb Ur Rehman (PhD student, University of Ottawa)

- Ammar Rashed (PhD student, University of Ottawa)

- Deniz Ugur (MSc student)

- Ghazaleh Bakhtiariazad (MSc student)

- Khaled Al Butainy (MSc student)

- Mohamed Hegazy (MSc student, graduated)

- Ihab Amer (AMD Fellow)

DeepGame: Efficient Video Encoding for Cloud Gaming

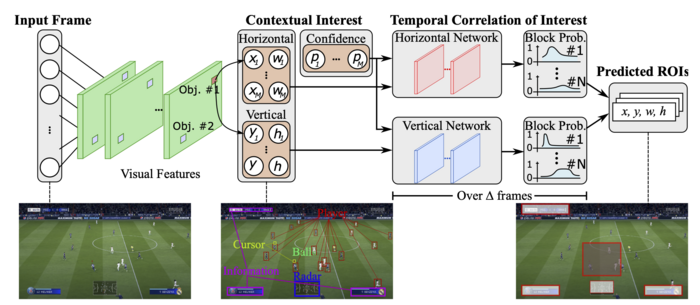

Cloud gaming enables users to play games on virtually any device. This is achieved by offloading game rendering and encoding to cloud datacenters. As game resolutions and frame rates increase, cloud gaming platforms face a major challenge in streaming high-quality games because of the high bandwidth and low latency requirements. We propose DeepGame, a new video encoding pipeline for cloud gaming platforms that reduces bandwidth requirements with limited to no impact on players' quality of experience. DeepGame learns the player’s contextual interest in the game and the temporal correlation of that interest using a spatio-temporal deep neural network. It then encodes different areas of the video frames at quality levels proportional to their contextual importance. DeepGame does not change the source code of the video encoder or the video game, and it does not require any additional hardware or software on the client side. We implemented DeepGame in an open-source cloud gaming platform and evaluated its performance using multiple popular games. We also conducted a subjective study with real players to demonstrate the potential gains achieved by DeepGame and its practicality. Our results show that DeepGame can reduce bandwidth requirements by up to 36% compared to the baseline encoder, while maintaining the same level of perceived quality for players and running in real time.
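The idea of encoding frame regions at quality levels proportional to their importance can be illustrated with a minimal sketch. It assumes per-block importance scores in [0, 1] are already available; in DeepGame they come from a spatio-temporal deep neural network, which this sketch does not reproduce. The temporal step here is a plain exponential moving average standing in for that network's temporal modelling, and the linear importance-to-QP mapping, the `base_qp` and `max_delta` values, and all function names are hypothetical choices, not DeepGame's actual design. QP follows the H.264/HEVC convention for 8-bit video: a lower quantization parameter means higher quality, with a valid range of 0 to 51.

```python
from typing import List, Optional

# One importance score per encoder block (e.g. per 16x16 macroblock or 64x64 CTU).
Grid = List[List[float]]


def smooth_importance(prev: Optional[Grid], curr: Grid, alpha: float = 0.6) -> Grid:
    """Exponential moving average of importance maps across frames.

    `alpha` weights the previous smoothed map, so regions that were important
    recently stay favoured and the quality map does not flicker. This is a
    simple stand-in for temporal modelling, not DeepGame's neural network.
    """
    if prev is None:
        return [row[:] for row in curr]
    return [
        [alpha * p + (1.0 - alpha) * c for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def importance_to_qp(
    importance: Grid,
    base_qp: int = 32,
    max_delta: int = 8,
    qp_min: int = 0,
    qp_max: int = 51,
) -> List[List[int]]:
    """Map importance in [0, 1] linearly to a per-block QP (lower QP = higher quality).

    importance 1.0 -> base_qp - max_delta, 0.5 -> base_qp, 0.0 -> base_qp + max_delta;
    results are clamped to [qp_min, qp_max].
    """
    qp_map = []
    for row in importance:
        qp_row = []
        for w in row:
            w = min(max(w, 0.0), 1.0)  # clamp scores outside [0, 1]
            delta = round(max_delta * (1.0 - 2.0 * w))
            qp_row.append(min(max(base_qp + delta, qp_min), qp_max))
        qp_map.append(qp_row)
    return qp_map


if __name__ == "__main__":
    # Two hypothetical 2x3 importance maps for consecutive frames.
    frames = [
        [[0.9, 0.2, 0.1], [0.6, 0.5, 0.0]],
        [[0.1, 0.8, 0.1], [0.6, 0.5, 0.0]],
    ]
    state: Optional[Grid] = None
    for i, imap in enumerate(frames):
        state = smooth_importance(state, imap)
        print(f"frame {i}: QP map {importance_to_qp(state)}")
```

The resulting QP grid would then be handed to the encoder through whatever region-of-interest or QP-offset interface it exposes. The source does not say which interface DeepGame uses, only that the encoder's source code is left unchanged.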

Code and Datasets

Publications

- O. Mosaad, K. Diab, I. Amer, and M. Hefeeda, DeepGame: Efficient Video Encoding for Cloud Gaming. In Proc. of ACM Multimedia Conference (MM'21), Chengdu, China, October 2021.

- M. Hegazy, K. Diab, M. Saeedi, B. Ivanovic, I. Amer, Y. Liu, G. Sines, and M. Hefeeda, Content-aware Video Encoding for Cloud Gaming. In Proc. of ACM Multimedia Systems Conference (MMSys'19), Amherst, MA, June 2019. (received the Best Student Paper Award and the ACM Artifacts Evaluated and Functional badge)