Private:leung

Summary of accomplishments by Gordon Leung.

16 Aug 2010

- Debugged errors that prevented data from being written to the TS file.

- Streamed the test.mp4 file to another computer using an SDP file.

- Could not stream old TS files to VLC. (Cheng mentioned this might not work until the correct PSI/SI data is generated.)

9 Aug 2010

- Got the Nokia TS file to stream to VLC using the SDP file.

- Tried to get our TS file to stream to VLC using a modified SDP file, but so far it does not work.

2 Aug 2010

- Still trying to stream the Nokia TS file to VLC.

26 July 2010

- Transmitted TS files and used DiviCache to verify bursts. TS files with mpe and mpe-fec are showing up.

- Tried to stream the TS file to VLC, but it does not work.

- Documented MPE-FEC

19 July 2010

- Fixed a major bug in Burst::enc_ts(), which solved the issue I was dealing with. Updated the Known Issues page.

- Tested the new Burst::enc_ts(). dvbSAM shows that all MPE and MPE-FEC sections are now good.

- Updated Status of Integration Tests in Design Document

- Looking over ESG and STAND algorithm.

TODO:

More integration testing

12 July 2010

- Updated Unit Test Case document for MPE-FEC

- Tried to track down the bug by analyzing more TS files and using gdb. Eventually wrote a report on the bug; see the Known Issues page.

TODO:

More integration testing

5 July 2010

- Fixed input values when packing mpe headers in burst::enc_mpe()

- Finished creating the test class for mpe_fec functions.

- Analyzed more TS files. Generated and validated a TS file with mpe-fec. dvbSAM shows that the RS code corrected thousands of bytes, which is unexpected. The number of corrected bytes fluctuates between bursts. Some bursts contain excess zeros, which accounts for the number of bytes corrected, but I am not yet sure why the zeros appear.

TODO:

More integration testing, analyze TS files in more detail

Update Unit Test Case document

28 June 2010

- Used dvbSAM to analyze TS files.

- Creating a test class to test mpe_fec_frame and mpe_fec_pkt functions.

FINDINGS: Generating a valid TS file using STAND or LATS does not seem to work, whether or not mpe-fec is enabled.

"Section number" parameter in MPE PKT seems incorrect when packing header. I do not know why it is being incremented.

21 June 2010

- Adjusted the LATS and STAND algorithm to produce the correct deltaT value when mpe-fec is enabled.

- Minor code edits in burst.cpp and transmitter.cpp to enable mpe-fec.

- Installed dvbSAM

TODO: Figure out where to modify the remaining algorithms to accommodate the extra MPE-FEC data. *having difficulty*

Test TS file using dvbSAM

14 June 2010

- Updated Design document to include mpe-fec information and unit test cases.

- Integrated mpe-fec code with existing code.

- Reading documentation on DVBSNOOP

TODO: Figure out where to modify algorithm to accommodate the extra MPE-FEC data. *having difficulty*

Couldn't edit the figure in the design doc because mpost is not installed.

7 June 2010

- Learned LaTeX

- Learned MetaUML

- Added mpe_fec_pkt and mpe_fec_frame to repository.

- Started to edit existing code to integrate my code

INTERFACE INFO(mpe_fec_frame):

Interacts with burst and mpe_fec_pkt class.

- burst: Creates an instance of mpe_fec_frame. Calls MPE_FEC_Frame_writeNextByte(char byte) to write bytes into frame. Calls MPE_FEC_Frame_finalize() to compute the RS parity data.

- mpe_fec_pkt: Takes the finalized mpe_fec_frame when creating a new instance of mpe_fec_pkt

INTERFACE INFO(mpe_fec_pkt):

Interacts with mpe_fec_frame and burst class.

- mpe_fec_frame: Gives the finalized mpe_fec_frame to create a new instance of mpe_fec_pkt

- burst: Creates new instances of mpe_fec_pkts, calls the update(...) and pack() methods to fill in the header.
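The frame interface described above can be pictured with a small sketch. This is not the project's MPE_FEC_Frame class: FrameSketch, appRow() and paddingColumns() are hypothetical names, and only writeNextByte mirrors the MPE_FEC_Frame_writeNextByte call mentioned in these notes. It assumes the usual MPE-FEC frame layout of 191 application data columns plus 64 RS columns, with datagram bytes written column by column, and it leaves out the RS parity computation done by finalize.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the project's MPE_FEC_Frame; illustration only.
class FrameSketch {
public:
    static constexpr std::size_t kAppCols = 191;  // application data columns
    static constexpr std::size_t kRsCols = 64;    // RS parity columns
    static constexpr std::size_t kCols = kAppCols + kRsCols;

    explicit FrameSketch(std::size_t rows)
        : rows_(rows), written_(0), cells_(rows * kCols, 0) {
        assert(rows > 0);
    }

    // Append one datagram byte. Bytes fill the application data table
    // column by column, top to bottom. Returns false once the table is full.
    bool writeNextByte(std::uint8_t byte) {
        if (written_ >= rows_ * kAppCols) return false;
        const std::size_t col = written_ / rows_;
        const std::size_t row = written_ % rows_;
        cells_[row * kCols + col] = byte;
        ++written_;
        return true;
    }

    // Copy the 191 application data bytes of one row. A row is the unit
    // that a row-at-a-time RS encoder would consume.
    std::vector<std::uint8_t> appRow(std::size_t row) const {
        assert(row < rows_);
        const auto first = cells_.begin() + static_cast<std::ptrdiff_t>(row * kCols);
        return std::vector<std::uint8_t>(first, first + static_cast<std::ptrdiff_t>(kAppCols));
    }

    // Number of application data columns that hold no datagram bytes at all.
    std::size_t paddingColumns() const {
        const std::size_t used = (written_ + rows_ - 1) / rows_;
        return kAppCols - used;
    }

private:
    std::size_t rows_;
    std::size_t written_;
    std::vector<std::uint8_t> cells_;  // row-major storage, rows_ x kCols
};
```

With a 256-row frame, for example, the 257th byte written lands in row 0 of column 1, so each row handed to the RS encoder mixes bytes from many different positions in the datagram stream.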

TODO:

update documentation, finish integration, and then test

31 May 2010

Created 2 classes: MPE_FEC_Frame and MPE_FEC_PKT.

MPE_FEC_Frame takes in IP datagrams and computes the RS codes. MPE_FEC_PKT implements the MPE-FEC section format specified in the standard.

I have tested the functions in the MPE_FEC_Frame and MPE_FEC_PKT classes. They seem to be working as expected. However, I am not sure how to verify the CRC calculation.
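One possible way to check the CRC calculation, sketched here under the assumption that the sections use the standard MPEG-2 CRC-32 (polynomial 0x04C11DB7, initial value 0xFFFFFFFF, most significant bit first, no final XOR). The function names crc32Mpeg2 and sectionCrcOk are made up for this example and are not part of the project code. Two checks are useful: the well-known test string "123456789" should give 0x0376E6E7, and running the CRC over a whole section including its trailing CRC bytes should give zero.

```cpp
#include <cstddef>
#include <cstdint>

// Bitwise CRC-32 in the MPEG-2 variant: polynomial 0x04C11DB7,
// initial value 0xFFFFFFFF, MSB first, no reflection, no final XOR.
std::uint32_t crc32Mpeg2(const std::uint8_t* data, std::size_t len) {
    std::uint32_t crc = 0xFFFFFFFFu;
    for (std::size_t i = 0; i < len; ++i) {
        crc ^= static_cast<std::uint32_t>(data[i]) << 24;
        for (int bit = 0; bit < 8; ++bit) {
            crc = (crc & 0x80000000u) ? ((crc << 1) ^ 0x04C11DB7u) : (crc << 1);
        }
    }
    return crc;
}

// A section whose last four bytes hold the CRC (big-endian) is intact
// when the CRC over the entire section, CRC bytes included, is zero.
bool sectionCrcOk(const std::uint8_t* section, std::size_t lenWithCrc) {
    return lenWithCrc >= 4 && crc32Mpeg2(section, lenWithCrc) == 0;
}
```

The zero-residue check is convenient for testing generated sections because it needs no reference value: any section packed by the code can be fed straight back through sectionCrcOk().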

TODO: Figure out the changes needed in the existing code in order to integrate my code.

24 May 2010

Test cases without padding, but with erasures: (Errors are introduced after encoding)

- Introduced 0, 16, 32, 33, 48, 64, 65 errors in an App Data row => Worked as expected.

- Introduced 0, 16, 32, 33, 48, 64 errors in a RS Data row => Worked as expected.

- Introduced 0, 32, 33, 64, 65 errors in a row of the MPE-FEC frame (App Data + RS Data) => Worked as expected.

Given erasure data, the total number of errors fixable within any block of data is now 64 regardless of position.

Test cases with padding and with erasures: (Testing this was a bit more tricky)

- Introduced 1 padding column with 32, 33, 64, and 65 continuous errors in App Data table => Worked as expected.

- Introduced 32 padding columns with 32, 33, 64, and 65 continuous errors in App Data table => Worked as expected.

- Introduced 64 padding columns with 32, 33, 64, 65, 128, and 129 continuous errors in App Data table => Worked as expected.

Basically, the padding columns are ignored and marked as reliable. The max number of correctable errors given erasure data and ignoring padded columns per block still stands at 64.

Correcting 64 errors per row, with erasure information, is the BEST that can be done for our RS code, since each row carries 64 RS parity bytes.
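The 32 and 64 limits seen in the tests follow from the general Reed-Solomon bound: a row decodes when twice the number of errors at unknown positions plus the number of erasures does not exceed the number of parity bytes. A minimal sketch of that rule, assuming the RS(255,191) code with 64 parity bytes per row (the names kParityBytes and rowCorrectable are made up for this example):

```cpp
// RS(255,191) as used for MPE-FEC rows: 64 parity bytes per row.
constexpr int kParityBytes = 64;

// A row is decodable when
//   2 * (errors at unknown positions) + (erasures) <= parity bytes.
constexpr bool rowCorrectable(int unknownErrors, int erasures) {
    return unknownErrors >= 0 && erasures >= 0 &&
           2 * unknownErrors + erasures <= kParityBytes;
}

// The boundary cases from the test notes, checked at compile time.
static_assert(rowCorrectable(32, 0), "32 errors without erasure info decode");
static_assert(!rowCorrectable(33, 0), "33 errors without erasure info fail");
static_assert(rowCorrectable(0, 64), "64 erasures decode");
static_assert(!rowCorrectable(0, 65), "65 erasures fail");
```

Mixed cases follow the same rule: for instance, 16 errors at unknown positions together with 32 erasures exactly use up the 64 parity bytes.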

Puncturing is possible without changes to the library.

Everything seems to be working according to the Standard except padding. Will look into it.

UPDATE: Padding will work according to the Standard if we ignore the padding parameter given in the library.

Read over the FEC standard

Reading FEC-related code from FATCAPS implementation.

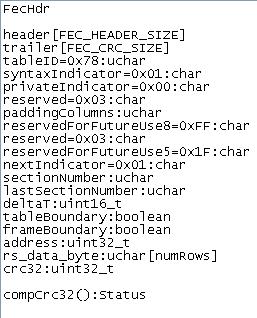

Next Steps: Define FecHdr class

UPDATE: need feedback on proposed FecHdr design

17 May 2010

- Learning C++

- Learning Unix

- Compiled FEC library on Linux. It does not work on Windows. Also, you must disable compiler optimization.

- Compiled C++ code that uses the library to encode and decode random data stored in memory.

The library encodes and decodes an MPE-FEC frame one row at a time.

Test cases without padding: (Errors are introduced after encoding)

- Introduced 0, 16, 32, 33, 38 continuous errors in each row of the App Data table => Worked as expected.

- Introduced 16, 32, 33, 38 errors spaced apart from each other in an App Data table row => Worked as expected.

- Introduced 0, 16, 32, 33, 38 continuous errors in each row of the RS table => Worked as expected.

- Introduced 16, 32, 33, 38 errors spaced apart from each other in an RS table row => Worked as expected.

- Introduced 16, 17 continuous errors in both the App Data table and RS table => Worked as expected.

- Introduced 16, 17 errors spread apart in both the App Data table and RS table => Worked as expected.

All of these test cases show that the maximum number of errors currently correctable in a block of data is 32, regardless of where the errors occur in the block. With CRC (used to mark unreliable bytes as erasures), it should be possible to correct up to 64 errors. I don't know whether CRC is implemented in the system.

The library handles padding slightly differently from the Standard Specification. The library assumes the padding columns are at the left of the App Data table, whereas the Standard assumes the padding columns are at the right of the App Data table. The library's padding parameter seems to work as expected.

Test cases with padding:

- Introduced 1 padding column with 32 and 33 continuous errors in App Data table => Worked as expected

- Introduced 32 padding columns with 64 and 65 continuous errors in App Data table => Worked as expected

Basically, the padding columns are ignored and marked as reliable. The max number of correctable errors ignoring padded columns per block still stands at 32.
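The difference between the two padding conventions can be illustrated with a short sketch. It is not taken from the library; PaddingSide, isPaddingColumn and effectiveErasures are hypothetical names, and the burst of corrupted bytes is assumed to start at a given column and run continuously. It assumes a 255-column row with 191 App Data columns, and counts only the corrupted columns that fall outside the padding, since padding columns are treated as reliable.

```cpp
// Where the padding columns sit in the 191-column App Data table.
enum class PaddingSide { Left, Right };  // Left: library, Right: Standard

const int kAppDataCols = 191;  // App Data columns per row
const int kRowCols = 255;      // App Data + RS columns per row

// True when 'col' is a padding column under the given convention.
bool isPaddingColumn(int col, int paddingCols, PaddingSide side) {
    if (side == PaddingSide::Left) {
        return col >= 0 && col < paddingCols;
    }
    return col >= kAppDataCols - paddingCols && col < kAppDataCols;
}

// Count the corrupted columns of a continuous burst that actually cost
// correction capacity, i.e. those that are not padding columns.
int effectiveErasures(int burstStart, int burstLen, int paddingCols,
                      PaddingSide side) {
    int count = 0;
    for (int col = burstStart; col < burstStart + burstLen && col < kRowCols; ++col) {
        if (col >= 0 && !isPaddingColumn(col, paddingCols, side)) {
            ++count;
        }
    }
    return count;
}
```

Under the left-hand convention, a burst that starts at column 0 spends its first bytes on padding columns, so those bytes do not count against the correction limit. Under the right-hand convention the same burst hits real data from the first byte.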

Next Steps: Test with some text file. Need function to write data into the MPE-FEC frame.

UPDATE: Testing with a text file does not seem necessary anymore. Having data stored in an array is sufficient for testing.